0 引言

建筑物作为人类生活环境的重要组成部分,体现了当地社会的经济发展状况,在遥感影像中提取建筑物区域能够为城市规划、社会经济发展等方面提供有力依据和基础地表信息,因此精准且高效地提取建筑物是遥感信息处理与分析的重要研究内容。

当前,遥感建筑物提取可分为传统方法和基于深度学习的方法2种类型[1]。传统的建筑物提取方法主要有阈值分割法、随机森林法和支持向量机法等。吴炜等[2]综合分析了光谱和形状特征,提出的建筑物自动提取方法获取了较高的识别率和较低的误识别率; 贾士军等[3]基于统计理论框架利用融合图像的颜色和纹理信息,实现了图像的分割和建筑物目标提取; Lagunas等[4]先独立提取建筑物的角点,再基于图像的模式匹配法进行角点匹配,从而实现建筑物的特征点提取。然而,以上方法都需要一定人力的特征设计和先验知识,受人的主观认知影响较大,还存在时间成本大,效率低下,人力财力资源消耗大等问题,面对广泛复杂的建筑物属性及周边环境依然有明显的局限性。

近年来,随着深度学习的快速发展,其强大的特征自动提取能力被国内外众多学者所关注,并在图像分类、语义分割和目标识别等领域取得不俗的成果。以深度学习为代表的人工智能新技术为遥感技术开辟了一条新的“学习”渠道,达到了较高的准确度[5]。基于全卷积神经网络(fully convolutional networks,FCN)[6]的语义分割方法是当今遥感影像建筑物提取的主流,通过对影像中每个像素点逐一划分对应的类别完成对建筑物的自动提取。U-Net[7],Unet++[8],SegNet[9],PSPNet[10],Deeplab系列[11]等基于FCN的经典网络也广泛应用于建筑物提取领域,并取得了显著效果。季顺平等[12]建立了用于建筑物提取的开源数据集,结合U-Net和特征金字塔网络(feature pyramid network,FPN)提出了SU-Net,实现了简单的跨尺度信息聚合,从多个尺度提高了对建筑物的分析能力; Yang等[13]提出了密集注意力网络,更好地利用了不同层次的特征,有效提升了建筑物的提取效果; 赵凌虎等[14]采用MobileNetv2作为改进Deeplabv3+的主干网络,较好地平衡了提取精度与速度的矛盾; Xia等[15]基于卷积和Transformer构造了双流特征提取网络,实现了从局部和全局2方面对遥感建筑物进行提取; 郭文等 [16]构建了多源卫星建筑物提取数据库,并基于注意力增强的FPN实现自动提取; 吕少云等[17]以Unet++网络为基础,在编码器末端添加了残差结构的空洞卷积模块,具有较强的泛化能力。

虽然以上方法在遥感影像建筑物提取方面已达到了较高精度,但对于小型建筑物和复杂场景下的建筑物仍存在提取不完整,边缘粗糙等问题。针对上述问题,本文提出了一种基于注意力机制和Deeplabv3+的建筑物提取网络,结合通道注意力模块和空间注意力模块对特征图进行多尺度处理,实现对建筑物分割精度的有效提升。

1 研究方法

1.1 特征提取主干网络

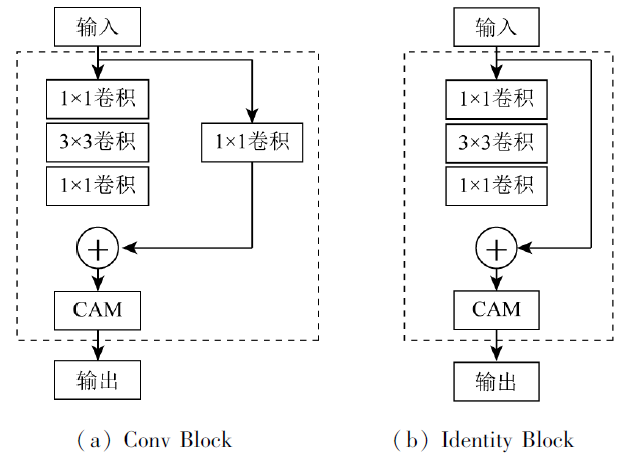

本文将Deeplabv3+原网络中的Xception[18]特征提取网络替换为Resnet50网络,并在Resnet50网络上添加通道注意力模块,解决因Xception网络结构较深而导致产生大量无效通道及特征图部分细节信息丢失的问题。Resnet50网络在传统的卷积神经网络(convolutional neural network,CNN)的基础上引入批归一化层(batch normalization,BN),弃用Dropout,同时引入2个基本的残差模块(Residual),名称分别为Conv Block和Identity Block,解决梯度消失或爆炸问题。改进后的注意力残差网络在Resnet50原有结构上的每一个残差模块后都添加了一个通道注意力模块(channel attention module,CAM),过滤无效的通道信息,如图1所示。其中Conv Block针对输入和输出维度不同的特征图,用于改变网络的维度; Identity Block针对输入和输出相同维度的特征图,用于加深网络。本文采用分级输出的方式分别输出浅层特征图和深层特征图,具体结构如表1所示,在第二层级卷积后输出浅层特征图,在网络末端输出深层特征图。

图1

表1 CAM-Resnet50网络结构

Tab.1

| 层级名称 | 输入尺寸 | 操作模块 | 重复 次数 | 输出 通道数 |

|---|---|---|---|---|

| Conv1 | 2242×3 | 7×7卷积 | 1 | 128 |

| Conv2_x | 1122×64 | Conv Block | 1 | 256 |

| Identity Block | 2 | |||

| Conv3_x | 562×256 | Conv Block | 1 | 512 |

| Identity Block | 3 | |||

| Conv4_x | 282×512 | Conv Block | 1 | 1 024 |

| Identity Block | 5 | |||

| Conv5_x | 142×1 024 | Conv Block | 1 | 2 048 |

| Identity Block | 2 | |||

| Conv6 | 72×2 048 | 1×1卷积 | 1 | 2 048 |

1.2 注意力机制

解码器的特征综合分析能力决定了网络对图像分割的准确性,受卷积块注意力模块(convolutional block attention module,CBAM)[19]的启发,本文提出了一种CAM和空间注意力模块(spatial attention module,SAM),并添加在网络的解码器中,用以加强全局信息之间的关联,关注特征图中的有效信息,忽略或剔除无关信息。

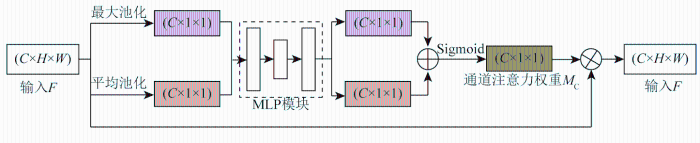

1.2.1 通道注意力

CNN通常进行多次卷积来获取更大的感受野,为了限制参数量和模型大小,没有学习特征图通道之间的关系。本文添加了一种轻量级的通道注意力模块,具体结构如图2所示,其中C,H,W分别为特征图的通道维数、高度和宽度。该模块首先对输入特征图分别进行最大池化和平均池化保持通道维度不变,实现特征图大小的压缩; 然后使用多层感知机(multilayer perceptron,MLP)模块对其通道进行缩放,将2个输出结果逐元素相加,并通过Sigmoid激活函数后得到通道注意力权重

图2

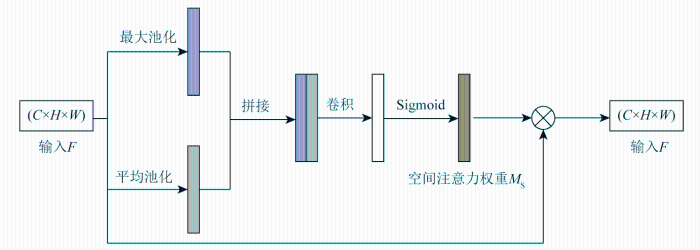

1.2.2 空间注意力

空间注意力是一种专注于目标位置信息的注意力机制,SAM模块的结构如图3所示。首先,对输入特征图沿通道维度进行最大池化和平均池化,将其拼接后进行卷积操作结合2种空间特征,然后通过Sigmoid激活函数得到最终的空间注意力权重MS。最后将权重值与输入特征图进行内积计算,赋予其相应的空间权重。

图3

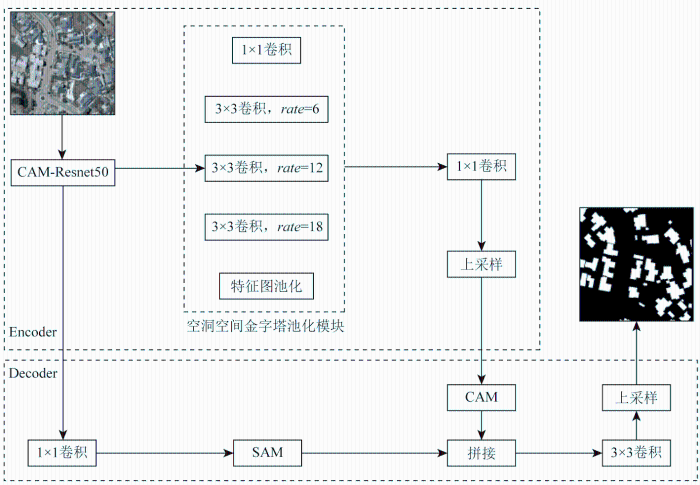

1.3 SC-deep网络

多层卷积网络的运用虽然提取了更多的高级特征,但获取的深层特征由于池化层的存在通常会丢失信息和忽略整体和部分之间的关联,导致对小目标分割不准确和边界粗糙等问题。为解决上述问题,本文提出了一种语义分割网络SC-deep,网络结构如图4所示,其中rate代表空洞卷积的采样率。该网络基于编码-解码(Encoder-Decoder)结构,主要由残差注意力网络、空洞卷积、CAM和SAM模块等组成,使用分级输出保留浅层特征,并与深层特征进行融合,其次使用空洞卷积来增大感受野,从而更好地捕捉目标物体的上下文信息,此外还引入注意力机制来加强对小目标的关注度。

图4

在编码阶段中,使用残差注意力网络(CAM-Resnet50)作为特征提取模块,该模块旨在提取遥感影像建筑物的浅层特征和深层特征。其中,浅层特征包括颜色、纹理、边缘和棱角等信息,而深层特征则是粗粒度语义等信息。为进一步提升深层特征的表达能力,在空洞空间金字塔池化(atrous spatial pyramid pooling,ASPP)模块中将不同采样率下生成的结果进行拼接,并通过卷积层和上采样调整通道维度和特征图尺寸。

在解码阶段,首先利用CAM对深层特征进行加权处理,以获取具有通道权重信息的深层特征图; 同时,浅层特征经过卷积操作后利用SAM在空间位置上进行加权,以自动捕获重要区域特征,并得到全局关联的浅层特征图; 然后将深层特征图与浅层特征图进行拼接,得到融合空间和通道注意力权重的多尺度信息聚合特征图; 最后通过卷积和上采样操作,将聚合的多尺度信息特征图恢复到原始图像大小。这种编解码结构的设计思路能够有效地提取并利用遥感影像建筑物的各种特征信息,为图像分割任务提供有力支撑。

2 实验与分析

2.1 数据集与预处理

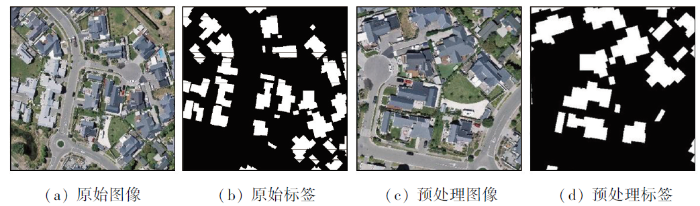

本文选取武汉大学季顺平教授及其团队提供的WHU建筑物语义分割数据集中的子数据集Aerial imagery dataset作为研究对象。该数据来自新西兰土地信息服务网站,手动编辑了基督城约22 000栋独立建筑的矢量数据,影像的空间分辨率为0.3 m。数据集中含有8 187张裁剪后大小为512像素×512像素的遥感图像,剔除无语义信息或信息较少的图像后,本文选取了6 455张图像,并以7∶1∶2的比例将其划分为训练集,验证集和测试集。为提升模型的鲁棒性,对训练数据集作随机裁剪、缩放、旋转等操作,结果如图5所示。

图5

2.2 实验环境及配置

为验证本文算法在建筑物提取方面的优越性,建立对比实验,所有实验均在统一软硬件实验环境下进行。实验环境如表2所示。

表2 实验环境配置

Tab.2

| 实验环境 | 配置参数 |

|---|---|

| CPU | Intel(R) Xeon(R) Gold 6330 |

| 内存/GB | 80 |

| GPU | RTX 3090 |

| 显存/GB | 24 |

| CUDA | CUDA11.3 |

| 学习框架 | Pytorch1.10.0 |

| 编程语言 | Python3.8 |

在SC-deep网络训练过程中,输入为大小512像素×512像素的遥感图像,设置训练样本批次大小为4,数据进程个数为4,迭代轮数为30,初始学习率为0.01,最小学习率为

2.3 评价指标

为对本文建筑物提取所用模型的性能进行定量描述,实验选取交并比(intersection over union,IoU)、召回率(Recall)、精确率(Precision)和F1分数(F1-score)4个指标作为评价指标,公式分别为:

式中:

2.4 消融实验

2.4.1 主干网络消融实验

为验证残差注意力网络作为主干网络的优越性,基于Deeplabv3+模型的基础框架,使用相同的数据集和实验环境,对主干网络的选取进行消融实验,分别使用原论文的Xception网络、轻量级mobilenetv2网络,视觉注意力vit网络和残差注意力CAM-Resnet50网络作为主干网络对Aerial imagery dataset数据集进行训练,并从IoU,Precision,Recall和F1-score这4个指标评判分割结果。消融实验结果如表3所示。

表3 主干网络消融实验结果

Tab.3

| 主干网络 | IoU | Precision | Recall | F1-score |

|---|---|---|---|---|

| Xception | 84.48 | 93.20 | 90.03 | 92.59 |

| mobilenetv2 | 83.94 | 91.33 | 91.21 | 91.27 |

| vit | 72.58 | 88.65 | 80.01 | 84.11 |

| CAM-Resnet50 | 88.75 | 94.86 | 93.23 | 94.04 |

分析表3可知,使用残差注意力网络作为主干网络可以明显提升模型性能。对比原论文Xception网络分别在IoU,Precision,Recall和F1-score方面提高了4.27,1.66,3.2和1.45百分点。此外与其他主干网络相比,残差注意力网络也展现出不同程度的性能提升,充分证明了残差注意力网络具有更强的特征提取能力。

2.4.2 注意力模块消融实验

在网络中,注意力模块的位置会导致分割精度差异。为确定2种注意力模块的最优位置达到最佳的分割效果,本文在模型中添加了不同位置的2种注意力模块,并对模型进行训练。分割结果指标如表4所示,表中Deep和Low分别表示编码器输出的深层特征图和浅层特征图。

表4 注意力模块消融实验结果

Tab.4

| 注意力模块位置 | IoU | Precision | Recall | F1-score |

|---|---|---|---|---|

| ASPP+CAM+SAM | 88.82 | 94.86 | 93.31 | 94.08 |

| ASPP+SAM+CAM | 88.52 | 95.27 | 92.59 | 93.91 |

| SAM+ASPP+CAM | 88.76 | 94.81 | 93.29 | 94.04 |

| SAM+CAM+ASPP | 86.36 | 93.52 | 91.85 | 92.68 |

| Deep+CAM,Low+SAM | 88.86 | 95.05 | 93.18 | 94.10 |

分析表4可知,由于低特征的图片具有更多的空间信息,将SAM模块放置在浅层特征之后更为合适。如果放置位置过前,就会导致概括性太大,无法捕捉一些具体特征,提取的空间注意力由于通道数较少导致概括性不足,更容易造成负面影响。因此,在使用SAM模块时需要平衡通道数和概括能力之间的关系。除了空间注意力,通道注意力也是提高模型性能的重要手段。对于高维特征图更需要通道注意力去维护图片的上下文关系,但是网络过深时,通道数过多容易引起过拟合,且特征图太小,使用卷积操作不当反而会引入大量非像素信息。对于编码器输出的深层特征,通道数量和特征图大小达到较好的平衡状态,更适合在其后添加CAM模块。

2.5 实验结果分析

表5 对比实验分割可视化结果

Tab.5

| 序号 | 真实图像 | U-Net | FCN | Deeplabv3+ | SC-deep |

|---|---|---|---|---|---|

| 1 |  | ||||

| 2 |  | ||||

| 3 |  | ||||

表6 对比实验结果

Tab.6

| 模型 | IoU | Precision | Recall | F1-score |

|---|---|---|---|---|

| U-Net | 85.13 | 92.31 | 91.62 | 91.97 |

| FCN | 87.95 | 93.66 | 93.52 | 93.59 |

| Deeplabv3+ | 84.48 | 93.20 | 90.03 | 92.59 |

| CS-deep | 88.86 | 95.05 | 93.18 | 94.10 |

根据表5显示,针对背景简单的大型建筑物,主流网络和本文网络都展现了较为精准的提取能力。然而,在提取小型建筑物时,主流网络会出现提取不完全和漏检现象。以第1行影像为例,在所有主流网络中都存在着提取不完整,建筑物边界不清晰的情况。而在处理更小型建筑物时(如第2行影像),FCN网络和Deeplabv3+网络都出现了漏检的现象。此外,当提取复杂环境下的遥感影像建筑物(如第3行影像)时,U-Net网络受到树木阴影的影响,导致建筑物顶部信息提取不完全; FCN网络和Deeplabv3+网络则存在细节边界分割不清晰的问题。相比之下,本文方法的提取结果更接近真实值,对于受到各种因素影响的建筑物提取更加准确,并且在处理小型建筑物和复杂场景的细节部分也能得到更精确的分割结果。

由表6可知,相较其他3种主流分割网络,本文方法在建筑物提取方面的精度得到进一步提高。与U-Net网络相比,SC-deep网络的提取结果的IoU,Precision,Recall和F1-score分别提升了3.73,2.74,1.56和2.13百分点。与FCN网络相比,在Recall基本保持一致的情况下,其他3个指标均有不同程度的提升。与Deeplabv3+相比,IoU,Precision,Recall和F1-score分别提升了4.38,1.85, 3.15和1.51百分点,这说明本文对Deeplabv3+网络的改进对建筑物提取性能产生了积极的影响。

综上所述,通过对比评价指标及实验分割结果的分析,可以得出以下结论: 本文提出的SC-deep网络在Aerial imagery dataset数据集上展现出更优秀的分割性能。尤其在小型建筑物的分割方面,其精准度更高。此外,在处理复杂场景下的遥感影像时,该网络也呈现出较为清晰的分割效果。

3 结论

建筑物是遥感影像反映地理信息的重要地物目标,利用遥感影像提取建筑物对地表覆盖分类、城市规划、地理信息数据库更新等具有重要意义。本文提出了基于混合注意力机制和Deeplabv3+的遥感影像建筑物提取网络SC-deep,在编码阶段引入残差注意力网络,利用ASPP模块增大感受野,在解码阶段引入CAM和SAM模块,从多尺度综合分析建筑物特征,有效地利用了高分辨率遥感影像的多尺度信息。

实验结果表明,本文SC-deep网络总体性能均优于其他主流网络,能够有效增强小型建筑物的提取效果,改善复杂场景下的遥感影像建筑物的细节提取。

值得注意的是,尽管本文网络能够对细小目标进行有效提取,但由于其像素比例小、可利用特征少等特点,仍存在边缘不清晰等问题,后续的研究将集中在提高细小目标提取精度上。

参考文献

结合多路径的高分辨率遥感影像建筑物提取SER-UNet算法

[J].

DOI:10.11947/j.AGCS.2023.20210691

[本文引用: 1]

针对深层卷积较难兼顾全局特征与局部特征从而导致提取建筑物边缘不准确和微小建筑物丢失的问题,以注意力机制和跳跃连接为基础提出SER-UNet算法。SER-UNet算法在编码器阶段耦合SE-ResNet和最大池化层,在解码器阶段关联SE-ResNet与反卷积层,通过跳跃连接将编码器提取的浅层特征和解码器提取的深层特征进行融合后输出特征图。验证SER-UNet算法的有效性,在MAP-Net网络并行多路径特征提取阶段使用SER-UNet算法替换原网络中的特征提取结构,分别在WHU数据集和Inria数据集上进行评估,IoU与精度分别达91.46%、82.61%和95.67%、92.75%,对比UNet、PSPNet、ResNet101、MAP-Net网络,IoU分别提高0.49%、0.14%、1.89%、1.57%,精度分别提高0.14%、1.06%、2.42%、1.09%。分析SER-UNet算法的泛化能力,将级联SER-UNet的MAP-Net网络在AerialImage数据集上进行提取验证,IoU与精度分别达85.32%和94.13%。结果表明,结合SER-UNet算法的MAP-Net并行多路径网络表现出较好的提取精度与泛化能力。此外,SER-UNet算法可以有效地嵌入PSPNet、ResNet101、HRNetv2等网络中,提升网络特征表示能力。

SER-UNet algorithm for building extraction from high-resolution remote sensing image combined with multipath

[J].

DOI:10.11947/j.AGCS.2023.20210691

[本文引用: 1]

Aiming at the problems of inaccurate edges and loss of small buildings in the extracted buildings due to the inability of deep convolution to take into account global features and local features, the SER-UNet algorithm is proposed based on attention mechanism and skip connection. SER-UNet algorithm couples SE_ResNet and max pooling layers in the encoder stage, and the SE_ResNet structure and deconvolution are used in the decoder stage. The feature map is output after fusing the shallow features extracted by the encoder and the deep features extracted by the decoder through skip connections. In order to analyze the effectiveness of the method, the SER-UNet is used to replace the feature extraction structure in the original network in the parallel multi-path feature extraction stage of the MAP-Net network. Finally, the method proposed is experimentally evaluated on the WHU dataset and the Inria dataset, and the IoU and precision reach 91.46%, 82.61% and 95.67%, 92.75%, compared with UNet, PSPNet, ResNet101, and MAP-Net Networks, the IoU is increased by 0.49%, 0.14%, 1.89%, and 1.57%, and the precision is increased by 0.14%, 1.06%, 2.42% and 1.09%, respectively. To further analyze the validity of the SER-UNet algorithm, the edge integrity and small extraction verification IoU and precision reached 85.32% and 94.13% on the AerialImage dataset. The experiment results show that the MAP-Net parallel multipath network combined with SER-UNet algorithm shows good generalization ability. In addition, the SER-UNet algorithm can be effectively embedded in PSPNet, ResNet101, HRNetv2 and other Networks to improve the ability of Network feature representation.

光谱和形状特征相结合的高分辨率遥感图像的建筑物提取方法

[J].

Building extraction from high resolution remote sensing imagery based on spatial-spectral method

[J].

融合颜色和纹理特征的彩色图像分割

[J].

Color image segmentation by integrating color and texture features

[J].

Pattern matching for building feature extraction

[J].

Photogrammetry and deep learning

[J].

DOI:10.11947/j.JGGS.2018.0101

[本文引用: 1]

Deep learning has become popular and the mainstream technology in many researches related to learning, and has shown its impact on photogrammetry. According to the definition of photogrammetry, that is, a subject that researches shapes, locations, sizes, characteristics and inter-relationships of real objects from optical images, photogrammetry considers two aspects, geometry and semantics. From the two aspects, we review the history of deep learning and discuss its current applications on photogrammetry, and forecast the future development of photogrammetry. In geometry, the deep convolutional neural network (CNN) has been widely applied in stereo matching, SLAM and 3D reconstruction, and has made some effects but needs more improvement. In semantics, conventional methods that have to design empirical and handcrafted features have failed to extract the semantic information accurately and failed to produce types of “semantic thematic map” as 4D productions (DEM, DOM, DLG, DRG) of photogrammetry. This causes the semantic part of photogrammetry be ignored for a long time. The powerful generalization capacity, ability to fit any functions and stability under types of situations of deep leaning is making the automatic production of thematic maps possible. We review the achievements that have been obtained in road network extraction, building detection and crop classification, etc., and forecast that producing high-accuracy semantic thematic maps directly from optical images will become reality and these maps will become a type of standard products of photogrammetry. At last, we introduce our two current researches related to geometry and semantics respectively. One is stereo matching of aerial images based on deep learning and transfer learning; the other is precise crop classification from satellite spatio-temporal images based on 3D CNN.

Fully convolutional networks for semantic segmentation

[J].

DOI:10.1109/TPAMI.2016.2572683

PMID:27244717

[本文引用: 1]

Convolutional networks are powerful visual models that yield hierarchies of features. We show that convolutional networks by themselves, trained end-to-end, pixels-to-pixels, improve on the previous best result in semantic segmentation. Our key insight is to build "fully convolutional" networks that take input of arbitrary size and produce correspondingly-sized output with efficient inference and learning. We define and detail the space of fully convolutional networks, explain their application to spatially dense prediction tasks, and draw connections to prior models. We adapt contemporary classification networks (AlexNet, the VGG net, and GoogLeNet) into fully convolutional networks and transfer their learned representations by fine-tuning to the segmentation task. We then define a skip architecture that combines semantic information from a deep, coarse layer with appearance information from a shallow, fine layer to produce accurate and detailed segmentations. Our fully convolutional networks achieve improved segmentation of PASCAL VOC (30% relative improvement to 67.2% mean IU on 2012), NYUDv2, SIFT Flow, and PASCAL-Context, while inference takes one tenth of a second for a typical image.

U-net:Convolutional networks for biomedical image segmentation

[C]//

Unet++:A nested U-Net architecture for medical image segmentation

[EB/OL].(

SegNet:A deep convolutional encoder-decoder architecture for image segmentation

[J].

DOI:10.1109/TPAMI.2016.2644615

PMID:28060704

[本文引用: 1]

We present a novel and practical deep fully convolutional neural network architecture for semantic pixel-wise segmentation termed SegNet. This core trainable segmentation engine consists of an encoder network, a corresponding decoder network followed by a pixel-wise classification layer. The architecture of the encoder network is topologically identical to the 13 convolutional layers in the VGG16 network [1]. The role of the decoder network is to map the low resolution encoder feature maps to full input resolution feature maps for pixel-wise classification. The novelty of SegNet lies is in the manner in which the decoder upsamples its lower resolution input feature map(s). Specifically, the decoder uses pooling indices computed in the max-pooling step of the corresponding encoder to perform non-linear upsampling. This eliminates the need for learning to upsample. The upsampled maps are sparse and are then convolved with trainable filters to produce dense feature maps. We compare our proposed architecture with the widely adopted FCN [2] and also with the well known DeepLab-LargeFOV [3], DeconvNet [4] architectures. This comparison reveals the memory versus accuracy trade-off involved in achieving good segmentation performance. SegNet was primarily motivated by scene understanding applications. Hence, it is designed to be efficient both in terms of memory and computational time during inference. It is also significantly smaller in the number of trainable parameters than other competing architectures and can be trained end-to-end using stochastic gradient descent. We also performed a controlled benchmark of SegNet and other architectures on both road scenes and SUN RGB-D indoor scene segmentation tasks. These quantitative assessments show that SegNet provides good performance with competitive inference time and most efficient inference memory-wise as compared to other architectures. We also provide a Caffe implementation of SegNet and a web demo at http://mi.eng.cam.ac.uk/projects/segnet.

Pyramid scene parsing network

[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

DeepLab:Semantic image segmentation with deep convolutional nets,atrous convolution,and fully connected CRFs

[J].

遥感影像建筑物提取的卷积神经元网络与开源数据集方法

[J].

DOI:10.11947/j.AGCS.2019.20180206

[本文引用: 1]

从遥感图像中自动化地检测和提取建筑物在城市规划、人口估计、地形图制作和更新等应用中具有极为重要的意义。本文提出和展示了建筑物提取的数个研究进展。由于遥感成像机理、建筑物自身、背景环境的复杂性,传统的经验设计特征的方法一直未能实现自动化,建筑物提取成为30余年尚未解决的挑战。先进的深度学习方法带来新的机遇,但目前存在两个困境:①尚缺少高精度的建筑物数据库,而数据是深度学习必不可少的“燃料”;②目前国际上的方法都采用像素级的语义分割,目标级、矢量级的提取工作亟待开展。针对于此,本文进行以下工作:①与目前同类数据集相比,建立了一套目前国际上范围最大、精度最高、涵盖多种样本形式(栅格、矢量)、多类数据源(航空、卫星)的建筑物数据库(WHU building dataset),并实现开源;②提出一种基于全卷积网络的建筑物语义分割方法,与当前国际上的最新算法相比达到了领先水平;③将建筑物提取的范围从像素级的语义分割推广至目标实例分割,实现以目标(建筑物)为对象的识别和提取。通过试验,验证了WHU数据库在国际上的领先性和本文方法的先进性。

Building extraction via convolutional neural networks from an open remote sensing building dataset

[J].

DOI:10.11947/j.AGCS.2019.20180206

[本文引用: 1]

Automatic extraction of buildings from remote sensing images is significant to city planning, popular estimation, map making and updating.We report several important developments in building extraction. Automatic building recognition from remote sensing data has been a scientific challenge of more than 30 years. Traditional methods based on empirical feature design can hardly realize automation. Advanced deep learning based methods show prospects but have two limitations now. Firstly, large and accurate building datasets are lacking while such dataset is the necessary fuel for deep learning. Secondly, the current researches only concern building's pixel wise semantic segmentation and the further extractions on instance-level and vector-level are urgently required. This paper proposes several solutions. First, we create a large, high-resolution, accurate and open-source building dataset, which consists of aerial and satellite images with both raster and vector labels. Second,we propose a novel structure based on fully neural network which achieved the best accuracy of semantic segmentation compared to most recent studies. Third, we propose a building instance segmentation method which expands the current studies of pixel-level segmentation to building-level segmentation. Experiments proved our dataset's superiority in accuracy and multi-usage and our methods' advancement. It is expected that our researches might push forward the challenging building extraction study.

Building extraction in very high resolution imagery by dense-attention networks

[J].

改进Deeplabv3+的高分辨率遥感影像道路提取模型

[J].

An information extraction model of roads from high-resolution remote sensing images based on improved Deeplabv3+

[J].

Dual-stream feature extraction network based on CNN and transformer for building extraction

[J].

基于注意力增强全卷积神经网络的高分卫星影像建筑物提取

[J].

Building extraction using high-resolution satellite imagery based on an attention enhanced full convolution neural network

[J].

Res_ASPP_UNet++:结合分离卷积与空洞金字塔的遥感影像建筑物提取网络

[J].

Res_ASPP_UNet++:Building an extraction network from remote sensing imagery combining depthwise separable convolution with atrous spatial pyramid pooling

[J].

Cbam:Convolutional block attention module

[C]//