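0 Introduction

Many spatial-spectral image fusion (pansharpening) methods have been proposed, and several surveys have organized them. Zhang Liangpei et al.[9] and Li Shutao et al.[10] divided spatial-spectral image fusion into three settings for review and analysis: panchromatic-multispectral, panchromatic-hyperspectral, and multispectral-hyperspectral fusion. Zhang Lifu et al.[11], in a survey of existing remote sensing image fusion research, summarized the algorithms applied to spatial-spectral fusion as either spatial-dimension enhancement or spectral-dimension enhancement. Meng et al.[12] used meta-analysis to evaluate the performance of different categories of methods proposed from 2000 to 2016 and traced the development of the field. Javan et al.[13] reviewed 41 methods, grouped them into 4 classes (component substitution, multiscale decomposition, variational optimization, and hybrid methods), and analyzed the fusion performance of each class. Vivone et al.[14] studied state-of-the-art algorithms from different categories, compared classical algorithms with third-generation ones, and provided a MATLAB toolbox for quantitative evaluation.

The surveys above mainly compare the performance and weigh the strengths and weaknesses of traditional methods. Component substitution, for example, is simple to implement, but the fused image quality is mediocre and spectral distortion is severe. Multiscale decomposition preserves spectra better than component substitution, but its spatial structure is still unsatisfactory. In recent years, with the development of deep learning theory, deep-learning-based remote sensing image fusion has been studied extensively. The deep-neural-network fusion algorithm of Huang et al.[15] and the simple yet effective three-layer network of Masi et al.[16] (pansharpening by convolutional neural networks, PNN) were among the earliest methods to apply deep learning to spatial-spectral image fusion. Compared with traditional methods, deep-learning-based methods improve considerably on both spectral preservation and the sharpness of spatial detail. Although many such methods have been proposed, a comprehensive and systematic analysis of them is lacking. This paper therefore groups the existing deep-learning-based methods into 3 categories (supervised, semi-supervised, and unsupervised learning) and describes the advantages and open problems of each. Finally, it discusses development trends and possible future research directions.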

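1 Common remote sensing satellites

Data sets for remote sensing image fusion consist of panchromatic and multispectral images provided by a variety of remote sensing satellites. Commonly used satellites include China's GaoFen series and, from other countries, GeoEye-1, IKONOS, and the WorldView series. The spatial resolution of the panchromatic image is typically 4 times that of the multispectral image, so it supplies rich spatial information. These satellites not only provide the data that image fusion depends on but are also of great value in industry, agriculture, and other fields. Table 1 lists the parameters of commonly used satellites.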

Tab. 1 Basic parameters of commonly used satellites

| Satellite | Image | Bands | Spatial resolution/m | Spectral range/nm | Revisit period/d |
|---|---|---|---|---|---|
| GaoFen-1 | PAN | 1 | 2 | 450~900 | 4 |
| | MS | 4 | 8 | Blue: 450~520, green: 520~590, red: 630~690, near-infrared: 770~890 | |
| GaoFen-2 | PAN | 1 | 1 | 450~900 | 5 |
| | MS | 4 | 4 | Blue: 450~520, green: 520~590, red: 630~690, near-infrared: 770~890 | |
| GeoEye-1 | PAN | 1 | 0.46 | 450~800 | 3 |
| | MS | 4 | 1.84 | Blue: 450~510, green: 510~580, red: 655~690, near-infrared: 780~920 | |
| IKONOS | PAN | 1 | 1 | 526~929 | 3 |
| | MS | 4 | 4 | Blue: 445~516, green: 506~595, red: 632~698, near-infrared: 757~853 | |
| QuickBird | PAN | 1 | 0.61 | 405~1053 | 1~6 |
| | MS | 4 | 2.44 | Blue: 430~545, green: 466~620, red: 590~710, near-infrared: 715~918 | |
| WorldView-2 | PAN | 1 | 0.5 | 450~800 | 1.1 |
| | MS | 8 | 2 | Blue: 450~510, green: 510~580, red: 630~690, near-infrared: 770~895, coastal: 400~450, yellow: 585~625, red edge: 705~745, near-infrared 2: 860~1040 | |
| WorldView-3 | PAN | 1 | 0.31 | 450~800 | 1 |
| | MS | 8 | 1.24 | Blue: 445~517, green: 507~586, red: 626~696, near-infrared: 765~899, coastal: 397~454, yellow: 580~629, red edge: 698~749, near-infrared 2: 857~1039 | |

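2 Traditional spatial-spectral remote sensing image fusion algorithms

2.1 Component substitution

Classical component substitution methods are generally global: they operate in the same way over the whole image. They are fast and simple. Because the panchromatic image, which is rich in detail, directly replaces one of the components, the fused images usually have good spatial detail. However, component substitution only works well when the panchromatic and multispectral images are highly correlated; otherwise, spectral mismatch between the images produces local dissimilarities and hence significant spectral distortion. To overcome this problem, improved methods have been proposed. For example, Garzelli et al.[20] proposed a band-dependent spatial-detail injection model (band dependent spatial-detail, BDSD), which performs well on 4-band multispectral images.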
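As an illustration of the substitution idea, the sketch below uses the additive form that is equivalent to substitution for linear transforms: replacing an intensity component with the panchromatic image amounts to adding their difference to every band. It is a minimal sketch under stated assumptions, not BDSD or any specific published method: the intensity is taken as the unweighted band mean, the panchromatic image is matched to it by mean and standard deviation, and the injection gain is 1. The names `cs_fuse` and `ms_up` are hypothetical.

```python
import numpy as np

def cs_fuse(ms_up, pan):
    """Generic component-substitution sketch.

    ms_up: (H, W, B) multispectral image already upsampled to the PAN grid.
    pan:   (H, W) panchromatic image.
    """
    # Assumption: the substituted component is the unweighted band mean.
    intensity = ms_up.mean(axis=2)
    # Match PAN to the intensity component (mean and standard deviation).
    scale = intensity.std() / (pan.std() + 1e-12)
    pan_matched = (pan - pan.mean()) * scale + intensity.mean()
    # Substituting intensity by PAN equals adding the difference to each band.
    detail = pan_matched - intensity
    return ms_up + detail[:, :, None]
```

Because the same detail image is added to every band, any mismatch between the panchromatic image and the intensity component is copied into all bands, which is one way to see where the spectral distortion discussed above comes from.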

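2.2 Multiscale decomposition

Image fusion based on multiscale decomposition consists of 3 steps: first, the source images are decomposed at multiple scales; next, the decomposition coefficients of the different source images are fused; finally, the inverse multiscale transform is applied to the fused coefficients to obtain the fused image. The decomposition splits each original image into high-frequency and low-frequency coefficients at several scales, and coefficient fusion combines the high- and low-frequency coefficients of each decomposition level according to separate fusion rules. Common multiscale decomposition methods include the Laplacian pyramid[21], the generalized Laplacian pyramid (GLP)[22], the discrete wavelet transform[23], the curvelet transform[24], the non-subsampled contourlet transform[25], and the non-subsampled shearlet transform[26-27]. Compared with component substitution, multiscale decomposition not only injects high-frequency spatial detail but also preserves the spectral information of the fused image fairly well, which alleviates spectral distortion to some extent. In return, it can lose spatial information and introduce ringing artifacts, so the structural detail of the fused image still needs improvement.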
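The three steps (decompose, fuse coefficients, invert) can be sketched with a deliberately crude decomposition. The code below is an assumption-laden illustration, not one of the cited transforms: a repeated 3 × 3 box blur stands in for the low-pass filter, the fusion rule keeps the low-frequency part of the multispectral band and the high-frequency parts of the panchromatic image, and the inverse transform is a plain sum. All function names are hypothetical.

```python
import numpy as np

def box_blur(img, k=3):
    """k x k mean filter with edge padding (stand-in for a proper low-pass filter)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def decompose(img, levels=2):
    """Step 1: split a 2-D image into a low-frequency residual and per-level details."""
    details, current = [], img.astype(float)
    for _ in range(levels):
        low = box_blur(current)
        details.append(current - low)   # high-frequency coefficients at this level
        current = low
    return current, details

def reconstruct(low, details):
    """Step 3: inverse transform (here simply the sum of all components)."""
    return low + sum(details)

def mra_fuse(ms_band_up, pan, levels=2):
    """Step 2 with one fixed rule: low frequencies from MS, high frequencies from PAN."""
    ms_low, _ = decompose(ms_band_up, levels)
    _, pan_details = decompose(pan, levels)
    return reconstruct(ms_low, pan_details)
```

`mra_fuse` would be applied to each upsampled multispectral band in turn. Real methods differ mainly in the filter bank used in step 1 and in the fusion rule used in step 2.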

2.3 Sparse representation

3 Deep-learning-based spatial-spectral remote sensing image fusion

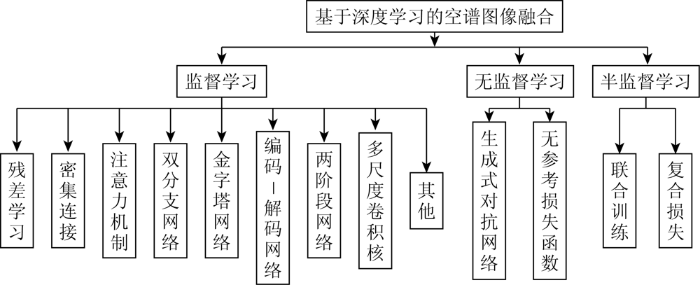

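Traditional spatial-spectral remote sensing image fusion methods have achieved some success, but they still have many problems and limitations. In contrast, deep-learning-based methods feed the raw data directly into a network for training and learning, which avoids the errors introduced by hand-crafted information extraction and markedly improves performance. This paper analyzes deep-learning-based spatial-spectral remote sensing image fusion in 3 categories: supervised, unsupervised, and semi-supervised learning, as shown in Fig. 1.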

Fig. 1 Classification of pansharpening based on deep learning

3.1 Supervised spatial-spectral remote sensing image fusion

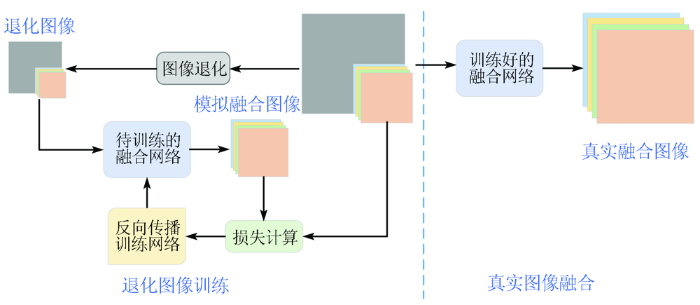

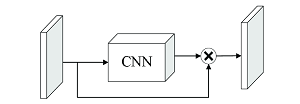

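At present, most deep-learning-based spatial-spectral image fusion methods use supervised learning. Fig. 2 shows the supervised fusion process. Because no high-spatial-resolution multispectral image is available as a reference, the network is first trained by backpropagation on spatially degraded panchromatic and multispectral images, with the original multispectral image serving as the reference. The trained model is then applied to the images at their original resolution to produce the final high-spatial-resolution multispectral image.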
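The reduced-resolution training set described above can be sketched as follows. This is a minimal illustration under assumptions: block averaging stands in for the degradation (practical pipelines usually low-pass filter before decimating), the resolution ratio is taken as 4, and the names `degrade` and `make_training_sample` are hypothetical.

```python
import numpy as np

def degrade(img, ratio=4):
    """Reduce spatial resolution by block averaging (assumed degradation model)."""
    h, w = img.shape[:2]
    h, w = h - h % ratio, w - w % ratio          # crop to a multiple of the ratio
    img = img[:h, :w]
    blocks = img.reshape(h // ratio, ratio, w // ratio, ratio, *img.shape[2:])
    return blocks.mean(axis=(1, 3))

def make_training_sample(pan, ms, ratio=4):
    """Return (degraded PAN, degraded MS, reference).

    The original MS image plays the role of the missing high-resolution
    reference, because the degraded PAN now has the same grid as the original MS.
    """
    return degrade(pan, ratio), degrade(ms, ratio), ms
```

For a 64 × 64 panchromatic image and a 16 × 16 × 4 multispectral image, the network inputs become 16 × 16 and 4 × 4 × 4, and the 16 × 16 × 4 original serves as the training target.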

Fig. 2

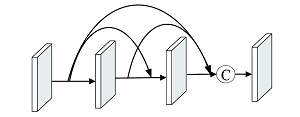

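Since most deep-learning-based spatial-spectral remote sensing image fusion methods are supervised, this paper divides them by their characteristics into 9 categories: methods based on residual learning, dense connections, attention mechanisms, two-branch network structures, pyramid networks, encoder-decoder networks, two-stage networks, and multiscale convolution kernels, plus other methods. Table 2 summarizes the characteristics and main network structure of each category.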

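Tab. 2 Comparison of supervised spatial-spectral image fusion algorithms

| Method category | Main network structure | Characteristics |
|---|---|---|
| Residual learning |  | Uses residual connections to improve information flow and avoid the vanishing-gradient and degradation problems caused by very deep networks |
| Dense connections |  | Uses a dense network as the backbone, with dense connections to strengthen information propagation |
| Attention mechanisms |  | Uses attention to adaptively reweight important information and improve the accuracy of feature extraction |
| Two-branch networks |  | Uses 2 branches to extract features from the panchromatic and multispectral images separately, then fuses the extracted features with a fusion network |
| Pyramid networks |  | Uses a pyramid network to extract features at different scales from the input, from low to high spatial resolution (or from high to low) |
| Encoder-decoder networks |  | A symmetric network made up of an encoder and a decoder; the encoder extracts features at different scales and the decoder restores the information at each scale |
| Two-stage networks |  | Each of the 2 stages has its own task and plays a different role |
| Multiscale convolution kernels |  | Applies several kernels of different sizes to the image to obtain features with different receptive fields |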

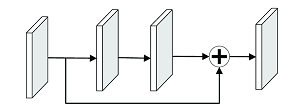

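3.1.1 Residual learning fusion methods

Residual-learning-based methods add residual connections to the network so that information from one residual block flows readily into the next. This improves information flow and avoids the vanishing-gradient and degradation problems caused by very deep networks.

Residual learning alleviates vanishing gradients in deep networks, making it possible to design and train deep neural networks and to improve fusion performance by adding depth. Beyond a certain depth, however, further layers bring little additional gain, while a very deep network has a large computational cost and parameter count and takes longer to train.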

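3.1.2 Dense connection fusion methods

As a network deepens, information is easily lost. To pass information on to all later layers, dense-connection-based methods connect all layers densely, ensuring maximum information flow between the layers of the network.

Whereas residual learning merges earlier features by summation, a densely connected network concatenates them, so every layer can reuse the features of all preceding layers for further feature extraction, which improves the flow of feature information and gradients. Because of this structure, the width of each layer is kept small, so performance has to come from adding layers; but each added layer increases the number of feature-map channels linearly, and GPU memory consumption grows sharply.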
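The contrast between summation and concatenation can be made concrete with a shape-level sketch. The code is illustrative only: `make_layer` is a hypothetical stand-in for a convolutional layer that emits a fixed number of channels (the growth rate), and arrays are channels-first with shape (C, H, W).

```python
import numpy as np

def residual_block(x, transform):
    """Residual learning: add the transformed features back onto the input."""
    return x + transform(x)

def dense_block(x, layers):
    """Dense connection: each layer sees the concatenation of all earlier outputs."""
    features = [x]
    for layer in layers:
        stacked = np.concatenate(features, axis=0)   # reuse every earlier feature map
        features.append(layer(stacked))
    return np.concatenate(features, axis=0)

def make_layer(growth):
    """Hypothetical stand-in for a conv layer that outputs `growth` channels."""
    def layer(feats):
        pooled = feats.mean(axis=0, keepdims=True)   # (1, H, W)
        return np.repeat(pooled, growth, axis=0)     # (growth, H, W)
    return layer
```

With 8 input channels and 3 layers of growth rate 4, the residual block still returns 8 channels, while the dense block returns 8 + 3 × 4 = 20. This is the linear channel growth, and the accompanying memory cost, noted above.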

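3.1.3 Attention mechanism fusion methods

Attention-based methods can flexibly exploit the similarity between different regions, channels, or even individual pixels of an image, adaptively reweighting feature information by importance and strengthening the network's learning capacity. However, channel attention processes the information within each channel globally and ignores spatial interaction, while spatial attention treats every channel identically and ignores interaction between channels. Non-local attention can capture global information, but it is computationally expensive and demands a lot of GPU memory, and deliberately reducing the channel dimension to cut computation lowers performance.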
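The two limitations mentioned above show up directly in a minimal sketch of channel and spatial attention. The forms below are generic, simplified assumptions (global average pooling followed by a single linear map and a sigmoid), not the design of any cited network; `w` is a hypothetical learned weight matrix.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(x, w):
    """x: (C, H, W) features; w: (C, C) weights (hypothetical learned parameter)."""
    squeezed = x.mean(axis=(1, 2))          # global pooling: spatial layout is discarded
    weights = sigmoid(w @ squeezed)         # one scalar weight per channel
    return x * weights[:, None, None]

def spatial_attention(x):
    """x: (C, H, W). One attention map shared by all channels."""
    attn = sigmoid(x.mean(axis=0))          # pooling over channels: channel identity is discarded
    return x * attn[None, :, :]
```

Channel attention reduces each channel to a single number before weighting, and spatial attention applies one map to every channel, which is why the two are often combined.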

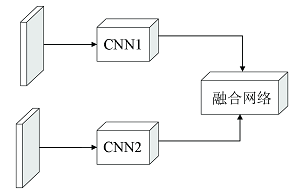

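3.1.4 Two-branch network fusion methods

Methods based on a two-branch network structure use 2 branches to extract features from the panchromatic and multispectral images separately, then fuse the extracted features to reconstruct the fused image.

RSIFNN (remote sensing image fusion with deep convolutional neural network), proposed by Shao et al.[44], and TFNet (two-stream fusion network), proposed by Liu et al.[45], both use 2 branch networks to capture the salient features of the multispectral and panchromatic images separately. Fu et al.[46] added feedback connections to a two-branch network to exploit the strong representational power of deep features; the resulting two-path network with feedback connections carries powerful deep features backward to refine the shallow features. The methods above use 2 branches with the same network structure to extract the features of the panchromatic and multispectral images. Other researchers process the two images with different network structures. He et al.[47] built a two-branch structure, with a detail branch and an approximation branch, in their spectral-aware convolutional neural network. It uses 2D convolution to extract panchromatic detail and 3D convolution to extract spectral information, which reduces the spectral distortion caused by 2D convolutional models.

Spatial-spectral image fusion takes as input a multispectral image and a panchromatic image that carry very different information, and a two-branch network can extract features from each independently. However, it is generally accepted that the multispectral image also contains spatial information and the panchromatic image also contains spectral information. Extracting the two separately therefore ignores the complementarity of the inputs, so feature extraction may be incomplete and the reconstructed image may still show spatial or spectral distortion.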

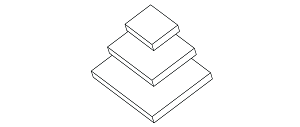

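3.1.5 Pyramid network fusion methods

The pyramid is a typical multiscale decomposition method that is widely used in image fusion. Researchers have carried the idea over to convolutional neural networks to build pyramid networks, usually realizing the pyramid through layer-by-layer upsampling or downsampling.

Pyramid network fusion involves repeated up- and downsampling. Sampling layer by layer extracts feature information at different scales, enlarges the receptive field to some extent, and makes feature extraction more thorough; compared with working at a single scale throughout, it also effectively reduces computation and parameter count. However, any upsampling or downsampling loses information, and an ill-chosen sampling method can easily cause distortion.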

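3.1.6 Encoder-decoder network fusion methods

An encoder-decoder network is a symmetric network made up of an encoder and a decoder: the encoder extracts features, and the decoder further refines and processes them. U-Net is a typical encoder-decoder network[51].

Most U-Net-based encoder-decoder fusion networks require multiple levels of scaling, and the encoder tends to lose boundary information while extracting features. Although skip connections are used to retain the original spectral and spatial information, the lost information is difficult to recover and reconstruct through the decoder alone, which may cause spatial distortion.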

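3.1.7 Two-stage network fusion methods

In the first stage, a two-stage network applies a method such as detail injection or super-resolution to the panchromatic or multispectral image as an initial refinement; in the second stage, it integrates the information to complete fusion and reconstruction. Spatial and spectral information can thus be extracted hierarchically, stage by stage.

Although two-stage networks are also trained end to end, the split into 2 stages is imposed by hand, and designing the first stage requires considerable experience and domain knowledge. If the first stage performs super-resolution, for instance, prior knowledge of super-resolution is needed.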

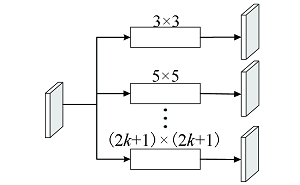

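3.1.8 Multiscale convolution kernel fusion methods

Feature extraction with multiscale convolution kernels is common in deep networks: several kernels of different sizes are applied to the image separately to obtain feature information over different ranges. Besides kernels of different sizes, dilated convolution can also enlarge the receptive field effectively without adding parameters.

Li et al.[59] designed a multiscale feature extraction block that applies kernels of different sizes to the panchromatic image to extract its spatial features. Peng et al.[39] and Yuan et al.[60] both added residual connections to their multiscale feature extraction modules; the residual connection keeps the transformed feature maps consistent with the originally extracted features and effectively avoids some distortion. In the methods above, the kernels are fixed after training. Since spatial detail differs at every position in an image, filtering all positions with the same filter rarely yields a satisfactory detail image or good sharpening. Hu et al.[61] proposed a multiscale dynamic convolutional neural network (MDCNN), which adaptively generates kernels of different sizes from the input and convolves them dynamically with the panchromatic image to extract features at different scales, effectively improving fusion performance.

Kernels of different sizes have different receptive fields, so multiscale kernels can integrate information from several receptive fields. However, a large kernel covers the same receptive field as several stacked layers of small kernels, and using it increases computation and parameter count markedly. Implementing multiscale convolution with dilated convolution adds no extra computation or parameters, but dilated convolution has difficulty extracting features of small objects and may lose spatially continuous information because of the holes. It enlarges the receptive field, but information gathered over too wide a range may be irrelevant.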
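The trade-off between large kernels, stacked small kernels, and dilated kernels can be checked with simple arithmetic. The helper names below are hypothetical; the formulas are the standard ones for a 2-D convolution with bias, stride 1.

```python
def conv_params(kernel, c_in, c_out):
    """Number of weights plus biases in one kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + c_out

def effective_kernel(kernel, dilation):
    """Spatial extent covered by a dilated kernel."""
    return dilation * (kernel - 1) + 1

def stacked_receptive_field(kernel, n_layers):
    """Receptive field of n stacked stride-1 layers with the same kernel size."""
    return n_layers * (kernel - 1) + 1
```

With 32 input and 32 output channels, a single 5 × 5 layer has 25632 parameters, two stacked 3 × 3 layers (also a 5 × 5 receptive field) have 18496, and one 3 × 3 layer with dilation 2 covers the same 5 × 5 extent with 9248 parameters, at the cost of sampling only 9 of the 25 positions.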

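3.1.9 Other methods

Researchers have also proposed other deep-learning-based spatial-spectral image fusion methods. Hu et al.[64] proposed a deep self-learning network for adaptive pansharpening, together with a point spread function estimation algorithm that obtains the blur kernel of the multispectral image and an edge-detection-based pixel matching method that corrects local misregistration between the images.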

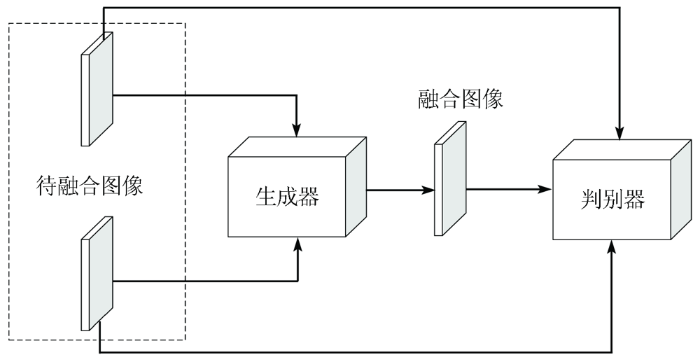

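3.2 Unsupervised spatial-spectral remote sensing image fusion

Supervised methods all need a reference image for training, but in practice no reference high-resolution multispectral image exists. A model is therefore trained on degraded images and then applied to the original images; since the degraded and original images differ in resolution and scale, fusion at the degraded scale does not fully reflect fusion at the original scale. To overcome this problem, unsupervised methods that train directly on the original images have been proposed. They fall into 2 main groups: generative adversarial networks and no-reference loss functions.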

Fig. 3

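3.3 Semi-supervised spatial-spectral remote sensing image fusion

Unsupervised methods lack a high-resolution multispectral image as a supervisory signal, which limits their performance. To overcome this problem, semi-supervised fusion methods that combine supervised and unsupervised learning have been proposed. They fall into 2 main groups: joint training on degraded and original images, and composite loss functions.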

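4 Loss functions

The loss function (also called the cost function) measures the difference between the network's prediction and the ground truth, and it strongly affects model performance. In what follows, y denotes the ground truth, that is, the reference image.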

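4.1 Spatial loss

The mean squared error (MSE) loss, also called the L2 loss, is commonly used for image reconstruction in deep learning and is widely applied in remote sensing image fusion. It is the mean of the squared differences between the predicted and true values, denoted $L_{\mathrm{MSE}}$, taken over the spatial coordinates i and j. The MSE loss is simple and popular, but it can make the reconstructed image blurry or overly smooth.

The mean absolute error (MAE) loss, also called the L1 loss, is another common choice. It is the mean of the absolute differences between the predicted and true values, denoted $L_{\mathrm{MAE}}$.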
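In their standard forms, the two losses can be written as follows. The notation is an assumption made for this sketch: $\hat{y}$ denotes the predicted (fused) image, $y$ the reference image, and $H$ and $W$ the image height and width; for multiband images the average is additionally taken over the bands.

```latex
L_{\mathrm{MSE}} = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\left(\hat{y}_{i,j}-y_{i,j}\right)^{2},
\qquad
L_{\mathrm{MAE}} = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\left|\hat{y}_{i,j}-y_{i,j}\right|
```

Squaring the error penalizes large deviations more heavily than small ones, which pulls the prediction toward the mean of plausible outputs and is consistent with the smoothing tendency of the MSE loss.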

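To reduce the effect of misregistration between the panchromatic and multispectral images, Choi et al.[79] proposed a spatial structure loss, the S3 spatial loss. It is based on the gradient difference between the fused image and the panchromatic image and takes into account the correlation between the reference multispectral image and the panchromatic image, which enhances the spatial structure of the fused image and suppresses anomalous spatial content such as artifacts. The method is defined in terms of a gradient grad(X), a correlation S, and a spatial loss, where m is a mean filter.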

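4.2 Spectral loss

A multispectral image has several spectral channels, whereas spatial losses are pointwise and cannot capture the relationship between spectral vectors. Spectral loss functions have been proposed for this reason.

Inspired by the spectral angle mapper (SAM) evaluation index, Hu et al.[57] proposed a loss function based on the correlation of spectral vectors, $L_{\mathrm{SAM}}$. It computes the cosine between corresponding spectral vectors of the fused and reference images to quantify their spectral similarity.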
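A spectral-angle loss of this kind can be sketched as the mean angle between corresponding pixel spectra. The exact form used by Hu et al. is not reproduced here; the version below is the generic SAM definition, and the function name `sam_loss` and the channels-last layout (H, W, B) are assumptions.

```python
import numpy as np

def sam_loss(fused, ref, eps=1e-8):
    """Mean spectral angle (radians) between two (H, W, B) images."""
    dot = np.sum(fused * ref, axis=-1)
    norms = np.linalg.norm(fused, axis=-1) * np.linalg.norm(ref, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)   # clip guards arccos against rounding
    return float(np.mean(np.arccos(cos)))
```

Scaling every spectrum by a constant leaves the angle unchanged, so this loss constrains the shape of the spectrum but not its magnitude. That is consistent with the observation that it is unsuitable as the only loss.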

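Eghbalian et al.[80] also proposed a spectral loss function. It considers not only the angle between the 2 vectors but also the difference in their magnitudes.

Xu et al.[78] likewise proposed a spectral loss, $L_{\mathrm{spectral}}$, that constrains spectral information using the correlation between the reference image and the panchromatic image. It focuses on pixel regions where the reference multispectral image and the output image are highly correlated, and multiplies the correlation pointwise with those regions to select the pixels to optimize.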

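4.3 Spatial-spectral loss

To combine spatial structure with spectral vector correlation, spatial-spectral loss functions have been proposed. The most common approach is a weighted sum of the spatial and spectral losses described above.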
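A weighted-sum loss of this form can be sketched as below, using MSE as the spatial term and the mean spectral angle as the spectral term. The choice of terms, the weight names `w_spatial` and `w_spectral`, and the default weights of 1 are assumptions for illustration.

```python
import numpy as np

def mse_loss(pred, ref):
    """Spatial term: mean squared error."""
    return float(np.mean((pred - ref) ** 2))

def sam_loss(pred, ref, eps=1e-8):
    """Spectral term: mean spectral angle (radians) over (H, W, B) images."""
    dot = np.sum(pred * ref, axis=-1)
    norms = np.linalg.norm(pred, axis=-1) * np.linalg.norm(ref, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))

def spatial_spectral_loss(pred, ref, w_spatial=1.0, w_spectral=1.0):
    """Weighted sum of a spatial and a spectral loss."""
    return w_spatial * mse_loss(pred, ref) + w_spectral * sam_loss(pred, ref)
```

The two terms have different units and scales (squared intensity versus radians), so equal weights do not mean equal influence; the weights usually need tuning for each data range.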

4.4 Perceptual loss

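5 Experimental results and analysis

This section reports experiments that compare the fusion performance of traditional and deep learning methods and examine how much the loss function matters for deep-learning-based methods. The experiments use 8-band WorldView-3 satellite images: 14400 image pairs of 32 pixels × 32 pixels for training and 36 pairs of source images of 240 pixels × 240 pixels for testing. For simulated (reduced-resolution) fusion, results are evaluated with 5 indices: the spatial correlation coefficient (SCC), SAM, the relative dimensionless global error in synthesis (erreur relative globale adimensionnelle de synthèse, ERGAS), the universal image quality index Q, and its N-band extension QN[83]. For real-image experiments, QNR is used for objective quantitative evaluation[84].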
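As an example of how one of these indices is computed, the sketch below implements ERGAS in its usual form: 100 divided by the resolution ratio, times the root of the band-averaged squared relative RMSE. The function name, the channels-last layout, and the default ratio of 4 are assumptions; the evaluation code used in the paper is not shown in the source.

```python
import numpy as np

def ergas(fused, ref, ratio=4):
    """ERGAS between two (H, W, B) images; lower is better, 0 means identical.

    ratio: MS-to-PAN pixel size ratio (4 for the satellites in Table 1).
    """
    diff = fused.astype(float) - ref.astype(float)
    mse_per_band = np.mean(diff ** 2, axis=(0, 1))     # squared RMSE of each band
    mean_per_band = np.mean(ref, axis=(0, 1))          # mean of each reference band
    return float(100.0 / ratio * np.sqrt(np.mean(mse_per_band / mean_per_band ** 2)))
```

For a reference with every pixel equal to 1 and a fused image that is uniformly 0.1 higher, each band has a relative RMSE of 0.1, so the index is 100 / 4 × 0.1 = 2.5.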

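5.1 Comparison of fusion methods

Twelve methods were compared: the traditional methods GS[18], BDSD[20], and GLP[22], and the representative deep learning methods PNN[16], PanNet[35], DCCNP[38], NLRNet[43], RSIFNN[44], TFNet[45], MSDCNN[60], MDCNN[61], and SDS[63]. Table 3 gives the results on the WorldView-3 data set. The deep-learning-based methods improve substantially on the traditional methods in overall performance. Among them, PNN, with only 3 simple convolutional layers, is slightly better than RSIFNN on SAM and SCC but otherwise the weakest, which shows that network depth strongly affects fusion performance. Compared with PNN, PanNet, which uses residual connections, and DCCNP, which uses dense connections, both perform better. The two-branch RSIFNN has a structure that favors extracting spatial information from the panchromatic image and extracts spectral information less well, so it falls short on SAM. TFNet, also two-branch, processes the multispectral and panchromatic images in the same way and achieves better results in the simulated experiments, indicating that extracting information from the two images is equally important. MSDCNN, based on multiscale kernels, strengthens the representational capacity of the network by widening it rather than greatly deepening it. Compared with methods that change network depth or width, NLRNet, based on non-local attention, has a fairly clear advantage on most indices, which highlights the benefit of non-local attention. Unlike the other methods, which use static convolution, MDCNN uses dynamic convolution and outperforms them on several indices. SDS, based on a dynamic network, improves further on MDCNN, indicating that dynamic approaches, whether dynamic convolution or dynamic networks, can markedly improve remote sensing image fusion.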

Tab. 3 Performance comparison of image fusion algorithms①

| Algorithm | Q | SAM | ERGAS | SCC | QN | QNR |
|---|---|---|---|---|---|---|
| GS | 0.8168 | 6.7111 | 4.5806 | 0.8273 | 0.8207 | 0.8677 |
| BDSD | 0.8538 | 8.0818 | 4.7332 | 0.8307 | 0.8583 | 0.8965 |
| GLP | 0.8669 | 6.4322 | 4.1250 | 0.8499 | 0.8721 | 0.8004 |
| PNN | 0.9311 | 5.0890 | 2.9798 | 0.9306 | 0.9295 | 0.9130 |
| PanNet | 0.9382 | 4.8479 | 2.8517 | 0.9351 | 0.9359 | 0.9290 |
| DCCNP | 0.9351 | 5.0763 | 2.9515 | 0.9358 | 0.9336 | 0.9152 |
| RSIFNN | 0.9347 | 5.1323 | 2.9173 | 0.9301 | 0.9334 | 0.9288 |
| TFNet | 0.9416 | 4.6137 | 2.7743 | 0.9431 | 0.9399 | 0.9186 |
| MSDCNN | 0.9351 | 4.9399 | 2.8919 | 0.9363 | 0.9332 | 0.9287 |
| NLRNet | <u>0.9433</u> | <u>4.3602</u> | 2.9296 | <u>0.9483</u> | 0.9399 | 0.9433 |
| MDCNN | 0.9416 | 4.3655 | <u>2.7063</u> | 0.9479 | <u>0.9401</u> | <u>0.9488</u> |
| SDS | **0.9483** | **4.3481** | **2.5969** | **0.9517** | **0.9464** | **0.9556** |

① Bold marks the best value and underline the second best.

5.2 Fusion results and performance analysis for different loss functions

Tab. 4 Performance comparison of different loss functions①

| Fusion network | Category | Loss function | Q | SAM | ERGAS | SCC | QN | QNR |
|---|---|---|---|---|---|---|---|---|
| PNN | Spatial loss | MSE | 0.9311 | 5.0890 | 2.9798 | 0.9306 | 0.9295 | 0.9130 |
| | | MAE | 0.9291 | 5.1336 | 3.0337 | 0.9287 | 0.9276 | 0.8956 |
| | | SSIM | 0.8216 | 15.4131 | 9.5000 | 0.9236 | 0.6757 | 0.8145 |
| | | MSE+SSIM | **0.9381** | 5.1311 | 2.9840 | <u>0.9310</u> | <u>0.9346</u> | **0.9329** |
| | | MAE+SSIM | 0.9372 | 5.0667 | **2.9644** | 0.9309 | **0.9348** | 0.9266 |
| | Spectral loss | SAM | 0.5662 | **4.7128** | 8.9289 | 0.5034 | 0.5390 | 0.6410 |
| | Spatial-spectral loss | MSE+SAM | 0.8787 | 5.0883 | 3.5945 | 0.9166 | 0.8776 | 0.8671 |
| | | MAE+SAM | 0.9281 | 5.0616 | 3.0353 | 0.9283 | 0.9271 | 0.9007 |
| | | MSE+SAM+SSIM | <u>0.9377</u> | <u>5.0150</u> | <u>2.9777</u> | **0.9314** | 0.9339 | <u>0.9282</u> |
| | | MAE+SAM+SSIM | 0.9341 | 5.1460 | 3.0202 | 0.9255 | 0.9315 | 0.9234 |

① Bold marks the best value and underline the second best.

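Table 4 shows that the MSE loss gives better overall performance than MAE. The SAM and SSIM losses are both clearly worse on their own, and neither is suitable as the sole loss function. Used alone, the SAM loss is worse than the others overall but achieves the best SAM index, so it helps preserve spectral information. Similarly, the SSIM loss scores poorly on the other indices but comparatively well on SCC, so it helps reconstruct spatial structural detail. Comparing MSE+SSIM with MSE and MAE+SSIM with MAE, SCC improves somewhat in both cases and the QNR index of the real-image experiments improves more clearly, further evidence that the SSIM loss promotes the injection of spatial detail.

In the spatial-spectral loss experiments, all weight coefficients were set to 1 for simplicity. MAE+SAM clearly outperforms MSE+SAM, possibly because a weight of 1 for each term is not appropriate for MSE+SAM. Compared with MAE alone, MAE+SAM also improves the SAM and QNR indices somewhat, showing that the SAM loss matters for the network's extraction of spectral information.

For the spatial-spectral losses built from 3 terms, MSE+SAM+SSIM performs better than MAE+SAM+SSIM. Compared with the 2-term losses and the single losses, however, simply combining loss functions does not necessarily improve network performance, possibly because the weights are unsuitable or the loss functions are not mutually compatible.

The experiments show that the choice of loss function affects the fusion result. MSE and MAE, the most common loss functions, both give good fusion performance; the spectral loss SAM and the structural similarity loss SSIM help optimize spectral and spatial quality respectively; and suitably combined spatial-spectral losses can outperform a single loss.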

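6 Conclusions and outlook

This paper first introduced 3 classes of traditional pansharpening methods, then introduced and analyzed the key techniques of deep-learning-based pansharpening. It reviewed the results that deep learning has achieved in spatial-spectral image fusion, organized into supervised, unsupervised, and semi-supervised learning, and described the loss functions used in current deep-learning-based pansharpening methods. Finally, it reported quantitative experiments on several classical fusion methods and loss functions, with a summary analysis. Although deep-learning-based spatial-spectral image fusion has achieved good results, many directions deserve further study.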

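1) Transfer across images. Existing spatial-spectral fusion methods generally transfer poorly: a model trained on the data of one satellite cannot be carried over to another, so a network has to be trained for each satellite separately. How to train a network on images from several satellites at once, or how to build a fusion model that transfers, is worth exploring.

2) Lightweight networks. To obtain better fusion results, researchers design models with deep structures, which greatly increases model complexity and computation and places high demands on hardware. To reduce computation while still improving performance, designing lightweight networks for spatial-spectral fusion, for example with dynamic mechanisms, is of real importance.

3) Neural architecture search. Network structure, depth, and width strongly affect fusion performance. To obtain good performance, researchers typically need many experiments to select a well-performing structure and hyperparameters, and the resulting models tend to be deep, complex, and computationally expensive. Neural architecture search explores the network search space and automatically generates the best-performing structure it finds, which can effectively improve network performance. Applying it to spatial-spectral image fusion is therefore a direction worth studying.

4) Combining model-driven and data-driven approaches. Current convolutional-neural-network fusion methods are data-driven: they rely on large amounts of training data and are black boxes whose internal fusion process is poorly understood. Model-driven methods instead design a transparent fusion process from objectives, mechanisms, and prior knowledge, which makes the model's trustworthiness easier to explain. Combining the two for image fusion is therefore worth studying.

5) Fusion for high-level tasks and multitask learning. Fused images are usually passed on to high-level tasks such as image classification and object detection, yet current fusion methods are designed mainly for reconstruction quality and do not take the characteristics of those tasks into account. Designing fusion methods with high-level tasks in mind is therefore of practical value. In addition, multitask learning trains several related tasks jointly, shares information among them, and exploits their correlation to improve each individual task. In remote sensing image fusion, high-level tasks such as classification and object detection can be learned jointly with fusion. For example, the distinctive spectral and spatial information obtained when a classification task labels regions as urban, farmland, and so on, and when a detection task finds bridges and roads, can be shared with the fusion task to improve its performance and generalization. Multitask learning for remote sensing image fusion is thus also worth in-depth study.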

References

多源空—谱遥感图像融合方法进展与挑战

[J].

Progress and challenges in the fusion of multisource spatial-spectral remote sensing images

[J].

A review of remote sensing image fusion methods

[J].DOI:10.1016/j.inffus.2016.03.003 URL [本文引用: 1]

多源图像融合方法的研究综述

[J].

A survey of fusion methods for multi-source image

[J].

干旱区Landsat8全色与多光谱数据融合算法评价

[J].

Fusion algorithm evaluation of Landsat8 panchromatic and multispetral images in arid regions

[J].

Remote sensing for agricultural applications:A meta-review

[J].DOI:10.1016/j.rse.2019.111402 URL [本文引用: 1]

多尺度特征增强的遥感图像舰船目标检测

[J].

Ship detection based on multi-scale feature enhancement of remote sensing images

[J].

遥感技术在交通气象灾害监测中的应用进展

[J].

Research progress of remote sensing application on transportation meteorological disasters

[J].

基于多分支CNN高光谱与全色影像融合处理

[J].

Pansharpening based on multi-branch CNN

[J].

遥感数据融合的进展与前瞻

[J].

Progress and future of remote sensing data fusion

[J].

多源遥感图像融合发展现状与未来展望

[J].

Development status and future prospects of multi-source remote sensing image fusion

[J].

遥感数据融合研究进展与文献定量分析(1992—2018)

[J].

Progress and bibliometric analysis of remote sensing data fusion methods (1992—2018)

[J].

Review of the pansharpening methods for remote sensing images based on the idea of meta-analysis:Practical discussion and challenges

[J].DOI:10.1016/j.inffus.2018.05.006 URL [本文引用: 1]

A review of image fusion techniques for pan-sharpening of high-resolution satellite imagery

[J].DOI:10.1016/j.isprsjprs.2020.11.001 URL [本文引用: 1]

A new benchmark based on recent advances in multispectral pansharpening:Revisiting pansharpening with classical and emerging pansharpening methods

[J].DOI:10.1109/MGRS.6245518 URL [本文引用: 1]

A new pan-sharpening method with deep neural networks

[J].DOI:10.1109/LGRS.2014.2376034 URL [本文引用: 1]

Pansharpening by convolutional neural networks

[J].DOI:10.3390/rs8070594 URL [本文引用: 2]

Combining the spectral PCA and spatial PCA fusion methods by an optimal filter

[J].DOI:10.1016/j.inffus.2015.06.006 URL [本文引用: 1]

Nonlinear IHS:A promising method for pan-sharpening

[J].DOI:10.1109/LGRS.2016.2597271 URL [本文引用: 1]

Optimal MMSE pan sharpening of very high resolution multispectral images

[J].DOI:10.1109/TGRS.2007.907604 URL [本文引用: 2]

MTF-tailored multiscale fusion of high-resolution MS and pan imagery

[J].DOI:10.14358/PERS.72.5.591 URL [本文引用: 1]

Full scale regression-based injection coefficients for panchromatic sharpening

[J].

DOI:10.1109/TIP.2018.2819501

PMID:29671744

[本文引用: 2]

Pansharpening is usually related to the fusion of a high spatial resolution but low spectral resolution (panchromatic) image with a high spectral resolution but low spatial resolution (multispectral) image. The calculation of injection coefficients through regression is a very popular and powerful approach. These coefficients are usually estimated at reduced resolution. In this paper, the estimation of the injection coefficients at full resolution for regression-based pansharpening approaches is proposed. To this aim, an iterative algorithm is proposed and studied. Its convergence, whatever the initial guess, is demonstrated in all the practical cases and the reached asymptotic value is analytically calculated. The performance is assessed both at reduced resolution and at full resolution on four data sets acquired by the IKONOS sensor and the WorldView-3 sensor. The proposed full scale approach always shows the best performance with respect to the benchmark consisting of state-of-the-art pansharpening methods.

Indusion:Fusion of multispectral and panchromatic images using the induction scaling technique

[J].DOI:10.1109/LGRS.2007.909934 URL [本文引用: 1]

High quality multi-spectral and panchromatic image fusion technologies based on curvelet transform

[J].DOI:10.1016/j.neucom.2015.01.050 URL [本文引用: 1]

A pan-sharpening based on the non-subsampled contourlet transform:Application to WorldView-2 imagery

[J].DOI:10.1109/JSTARS.4609443 URL [本文引用: 1]

基于NSST的PCNN-SR卫星遥感图像融合方法

[J].

Satellite remote sensing image fusion method based on NSST and PCNN-SR

[J].

混沌蜂群优化的NSST域多光谱与全色图像融合

[J].

Multispectral and panchromatic image fusion using chaotic Bee Colony optimization in NSST domain

[J].

A new pan-sharpening method using a compressed sensing technique

[J].DOI:10.1109/TGRS.2010.2067219 URL [本文引用: 1]

PAN-guided cross-resolution projection for local adaptive sparse representation-based pansharpening

[J].DOI:10.1109/TGRS.36 URL [本文引用: 1]

基于字典学习的遥感影像超分辨率融合方法

[J].

Super-resolution fusion method for remote sensing image based on dictionary learning

[J].

Sparse representation based pansharpening with details injection model

[J].DOI:10.1016/j.sigpro.2014.12.017 URL [本文引用: 1]

Remote sensing image fusion via sparse representations over learned dictionaries

[J].DOI:10.1109/TGRS.2012.2230332 URL [本文引用: 1]

Target-adaptive CNN-based pansharpening

[J].DOI:10.1109/TGRS.36 URL [本文引用: 1]

Boosting the accuracy of multispectral image pansharpening by learning a deep residual network

[J].DOI:10.1109/LGRS.2017.2736020 URL [本文引用: 1]

PanNet:A deep network architecture for pan-sharpening

[C]//

GTP-PNet:A residual learning network based on gradient transformation prior for pansharpening

[J].DOI:10.1016/j.isprsjprs.2020.12.014 URL [本文引用: 2]

PCDRN:Progressive cascade deep residual network for pansharpening

[J].

DOI:10.3390/rs12040676

URL

[本文引用: 1]

Pansharpening is the process of fusing a low-resolution multispectral (LRMS) image with a high-resolution panchromatic (PAN) image. In the process of pansharpening, the LRMS image is often directly upsampled by a scale of 4, which may result in the loss of high-frequency details in the fused high-resolution multispectral (HRMS) image. To solve this problem, we put forward a novel progressive cascade deep residual network (PCDRN) with two residual subnetworks for pansharpening. The network adjusts the size of an MS image to the size of a PAN image twice and gradually fuses the LRMS image with the PAN image in a coarse-to-fine manner. To prevent an overly-smooth phenomenon and achieve high-quality fusion results, a multitask loss function is defined to train our network. Furthermore, to eliminate checkerboard artifacts in the fusion results, we employ a resize-convolution approach instead of transposed convolution for upsampling LRMS images. Experimental results on the Pléiades and WorldView-3 datasets prove that PCDRN exhibits superior performance compared to other popular pansharpening methods in terms of quantitative and visual assessments.

A new architecture of densely connected convolutional networks for pan-sharpening

[J].

DOI:10.3390/ijgi9040242

URL

[本文引用: 2]

In this paper, we propose a new architecture of densely connected convolutional networks for pan-sharpening (DCCNP). Since the traditional convolution neural network (CNN) has difficulty handling the lack of a training sample set in the field of remote sensing image fusion, it easily leads to overfitting and the vanishing gradient problem. Therefore, we employed an effective two-dense-block architecture to solve these problems. Meanwhile, to reduce the network architecture complexity, the batch normalization (BN) layer was removed in the design architecture of DenseNet. A new architecture of DenseNet for pan-sharpening, called DCCNP, is proposed, which uses a bottleneck layer and compression factors to narrow the network and reduce the network parameters, effectively suppressing overfitting. The experimental results show that the proposed method can yield a higher performance compared with other state-of-the-art pan-sharpening methods. The proposed method not only improves the spatial resolution of multi-spectral images, but also maintains the spectral information well.

PSMD-Net:A novel pan-sharpening method based on a multiscale dense network

[J].DOI:10.1109/TGRS.2020.3020162 URL [本文引用: 2]

CSAFNET:Channel similarity attention fusion network for multispectral pansharpening

[J].

基于知识引导的遥感影像融合方法

[J].

Knowledge-based remote sensing imagery fusion method

[J].

A differential information residual convolutional neural network for pansharpening

[J].DOI:10.1016/j.isprsjprs.2020.03.006 URL [本文引用: 1]

NLRNet:An efficient nonlocal attention resnet for pansharpening

[J].

Remote sensing image fusion with deep convolutional neural network

[J].DOI:10.1109/JSTARS.4609443 URL [本文引用: 2]

Remote sensing image fusion based on two-stream fusion network

[J].

Two-path network with feedback connections for pan-sharpening in remote sensing

[J].

A spectral-aware convolutional neural network for pansharpening

[J].

Pan-sharpening using an efficient bidirectional pyramid network

[J].DOI:10.1109/TGRS.36 URL [本文引用: 1]

Pan-sharpening based on parallel pyramid convolutional neural network

[C]//

基于深度金字塔网络的Pan-Sharpening算法

[J].

Pan-sharpening based on a deep pyramid network

[J].

U-Net:Convolutional networks for biomedical image segmentation

[C]//

Pixel-wise regression using U-Net and its application on pansharpening

[J].DOI:10.1016/j.neucom.2018.05.103 URL [本文引用: 1]

MSDRN:Pansharpening of multispectral images via multi-scale deep residual network

[J].

DOI:10.3390/rs13061200

URL

[本文引用: 1]

In order to acquire a high resolution multispectral (HRMS) image with the same spectral resolution as multispectral (MS) image and the same spatial resolution as panchromatic (PAN) image, pansharpening, a typical and hot image fusion topic, has been well researched. Various pansharpening methods that are based on convolutional neural networks (CNN) with different architectures have been introduced by prior works. However, different scale information of the source images is not considered by these methods, which may lead to the loss of high-frequency details in the fused image. This paper proposes a pansharpening method of MS images via multi-scale deep residual network (MSDRN). The proposed method constructs a multi-level network to make better use of the scale information of the source images. Moreover, residual learning is introduced into the network to further improve the ability of feature extraction and simplify the learning process. A series of experiments are conducted on the QuickBird and GeoEye-1 datasets. Experimental results demonstrate that the MSDRN achieves a superior or competitive fusion performance to the state-of-the-art methods in both visual evaluation and quantitative evaluation.

Real-time and effective pan-sharpening for remote sensing using multi-scale fusion network

[J].

Two stages pan-sharpening details injection approach based on very deep residual networks

[J].DOI:10.1109/TGRS.2020.3019835 URL [本文引用: 1]

Deep residual learning for image recognition

[C]//

Two-stage pansharpening based on multi-level detail injection network

[J].DOI:10.1109/Access.6287639 URL [本文引用: 4]

SC-PNN:Saliency cascade convolutional neural network for pansharpening

[J].

MDECNN:A multiscale perception dense encoding convolutional neural network for multispectral pan-sharpening

[J].

DOI:10.3390/rs13030535

URL

[本文引用: 1]

With the rapid development of deep neural networks in the field of remote sensing image fusion, the pan-sharpening method based on convolutional neural networks has achieved remarkable effects. However, because remote sensing images contain complex features, existing methods cannot fully extract spatial features while maintaining spectral quality, resulting in insufficient reconstruction capabilities. To produce high-quality pan-sharpened images, a multiscale perception dense coding convolutional neural network (MDECNN) is proposed. The network is based on dual-stream input, designing multiscale blocks to separately extract the rich spatial information contained in panchromatic (PAN) images, designing feature enhancement blocks and dense coding structures to fully learn the feature mapping relationship, and proposing comprehensive loss constraint expectations. Spectral mapping is used to maintain spectral quality and obtain high-quality fused images. Experiments on different satellite datasets show that this method is superior to the existing methods in both subjective and objective evaluations.

A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening

[J].DOI:10.1109/JSTARS.4609443 URL [本文引用: 2]

Pan-sharpening via multiscale dynamic convolutional neural network

[J].DOI:10.1109/TGRS.36 URL [本文引用: 2]

Learning an efficient convolution neural network for pansharpening

[J].

DOI:10.3390/a12010016

URL

[本文引用: 1]

Pansharpening is a domain-specific task of satellite imagery processing, which aims at fusing a multispectral image with a corresponding panchromatic one to enhance the spatial resolution of multispectral image. Most existing traditional methods fuse multispectral and panchromatic images in linear manners, which greatly restrict the fusion accuracy. In this paper, we propose a highly efficient inference network to cope with pansharpening, which breaks the linear limitation of traditional methods. In the network, we adopt a dilated multilevel block coupled with a skip connection to perform local and overall compensation. By using dilated multilevel block, the proposed model can make full use of the extracted features and enlarge the receptive field without introducing extra computational burden. Experiment results reveal that our network tends to induce competitive even superior pansharpening performance compared with deeper models. As our network is shallow and trained with several techniques to prevent overfitting, our model is robust to the inconsistencies across different satellites.

Spatial dynamic selection network for remote-sensing image fusion

[J].

Deep self-learning network for adaptive pansharpening

[J].

DOI:10.3390/rs11202395

URL

[本文引用: 1]

Deep learning (DL)-based paradigms have recently made many advances in image pansharpening. However, most of the existing methods directly downscale the multispectral (MSI) and panchromatic (PAN) images with default blur kernel to construct the training set, which will lead to the deteriorative results when the real image does not obey this degradation. In this paper, a deep self-learning (DSL) network is proposed for adaptive image pansharpening. First, rather than using the fixed blur kernel, a point spread function (PSF) estimation algorithm is proposed to obtain the blur kernel of the MSI. Second, an edge-detection-based pixel-to-pixel image registration method is designed to recover the local misalignments between MSI and PAN. Third, the original data is downscaled by the estimated PSF and the pansharpening network is trained in the down-sampled domain. The high-resolution result can be finally predicted by the trained DSL network using the original MSI and PAN. Extensive experiments on three images collected by different satellites prove the superiority of our DSL technique, compared with some state-of-the-art approaches.

PWnet:An adaptive weigh network for the fusion of panchromatic and multispectral images

[J].

DOI:10.3390/rs12172804

URL

[本文引用: 1]

Pansharpening is a typical image fusion problem, which aims to produce a high resolution multispectral (HRMS) image by integrating a high spatial resolution panchromatic (PAN) image with a low spatial resolution multispectral (MS) image. Prior arts have used either component substitution (CS)-based methods or multiresolution analysis (MRA)-based methods for this propose. Although they are simple and easy to implement, they usually suffer from spatial or spectral distortions and could not fully exploit the spatial and/or spectral information existed in PAN and MS images. By considering their complementary performances and with the goal of combining their advantages, we propose a pansharpening weight network (PWNet) to adaptively average the fusion results obtained by different methods. The proposed PWNet works by learning adaptive weight maps for different CS-based and MRA-based methods through an end-to-end trainable neural network (NN). As a result, the proposed PWN inherits the data adaptability or flexibility of NN, while maintaining the advantages of traditional methods. Extensive experiments on data sets acquired by three different kinds of satellites demonstrate the superiority of the proposed PWNet and its competitiveness with the state-of-the-art methods.

Detail injection-based deep convolutional neural networks for pansharpening

[J].

Generative adversarial networks

[J].

DOI:10.1145/3422622

URL

[本文引用: 1]

Generative adversarial networks are a kind of artificial intelligence algorithm designed to solve the generative modeling problem. The goal of a generative model is to study a collection of training examples and learn the probability distribution that generated them. Generative Adversarial Networks (GANs) are then able to generate more examples from the estimated probability distribution. Generative models based on deep learning are common, but GANs are among the most successful generative models (especially in terms of their ability to generate realistic high-resolution images). GANs have been successfully applied to a wide variety of tasks (mostly in research settings) but continue to present unique challenges and research opportunities because they are based on game theory while most other approaches to generative modeling are based on optimization.

Pan-GAN:An unsupervised pan-sharpening method for remote sensing image fusion

[J].DOI:10.1016/j.inffus.2020.04.006 URL [本文引用: 2]

PercepPan:Towards unsupervised pan-sharpening based on perceptual loss

[J].

DOI:10.3390/rs12142318

URL

[本文引用: 1]

In the literature of pan-sharpening based on neural networks, high resolution multispectral images as ground-truth labels generally are unavailable. To tackle the issue, a common method is to degrade original images into a lower resolution space for supervised training under the Wald’s protocol. In this paper, we propose an unsupervised pan-sharpening framework, referred to as “perceptual pan-sharpening”. This novel method is based on auto-encoder and perceptual loss, and it does not need the degradation step for training. For performance boosting, we also suggest a novel training paradigm, called “first supervised pre-training and then unsupervised fine-tuning”, to train the unsupervised framework. Experiments on the QuickBird dataset show that the framework with different generator architectures could get comparable results with the traditional supervised counterpart, and the novel training paradigm performs better than random initialization. When generalizing to the IKONOS dataset, the unsupervised framework could still get competitive results over the supervised ones.

Pan-sharpening based on convolutional neural network by using the loss function with no-reference

[J].DOI:10.1109/JSTARS.4609443 URL [本文引用: 1]

Pansharpening via unsupervised convolutional neural networks

[J].DOI:10.1109/JSTARS.4609443 URL [本文引用: 1]

半监督卷积神经网络遥感图像融合

[J],

Semi-supervised convolutional neural network remote sensing image fusion

[J].

A detail-preserving cross-scale learning strategy for CNN-based pansharpening

[J].

DOI:10.3390/rs12030348

URL

[本文引用: 1]

The fusion of a single panchromatic (PAN) band with a lower resolution multispectral (MS) image to raise the MS resolution to that of the PAN is known as pansharpening. In the last years a paradigm shift from model-based to data-driven approaches, in particular making use of Convolutional Neural Networks (CNN), has been observed. Motivated by this research trend, in this work we introduce a cross-scale learning strategy for CNN pansharpening models. Early CNN approaches resort to a resolution downgrading process to produce suitable training samples. As a consequence, the actual performance at the target resolution of the models trained at a reduced scale is an open issue. To cope with this shortcoming we propose a more complex loss computation that involves simultaneously reduced and full resolution training samples. Our experiments show a clear image enhancement in the full-resolution framework, with a negligible loss in the reduced-resolution space.

结合双胞胎结构与生成对抗网络的半监督遥感图像融合

[J].

Semi-supervised remote sensing image fusion method combining siamese structure with generative adversarial networks

[J].DOI:10.3724/SP.J.1089.2021.18227 URL [本文引用: 1]

Wasserstein generative adversarial networks

[C]//

Conditional generative adversarial nets

[J].

Feature-level loss for multispectral pan-sharpening with machine learning

[C]//

SDPNet:A deep network for pan-sharpening with enhanced information representation

[J].DOI:10.1109/TGRS.2020.3022482 URL [本文引用: 4]

S3:A spectral-spatial structure loss for pan-sharpening networks

[J].DOI:10.1109/LGRS.8859 URL [本文引用: 1]

Multi spectral image fusion by deep convolutional neural network and new spectral loss function

[J].DOI:10.1080/01431161.2018.1452074 URL [本文引用: 1]

Perceptual losses for real-time style transfer and super-resolution

[C]//

Pan-sharpening with color-aware perceptual loss and guided re-colorization

[C]//

A critical comparison among pansharpening algorithms

[J].DOI:10.1109/TGRS.2014.2361734 URL [本文引用: 1]

Multispectral and panchromatic data fusion assessment without reference

[J].DOI:10.14358/PERS.74.2.193 URL [本文引用: 1]