0 Introduction

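In the 21st century, satellite remote sensing has been widely used as an important means of Earth observation. However, the global annual mean cloud cover is about 66% [1]. This means that more than half of routinely acquired optical satellite images are obscured by clouds and yield little usable information. Generating cloud masks through cloud detection is therefore important for screening valid data. In recent years, satellite remote sensing technology has advanced and the volume of remote sensing data has grown steadily. Statistics show that hundreds of optical satellite scenes are received per day, and traditional cloud detection approaches can no longer keep up with large-scale data production. Developing fast, efficient, and accurate cloud detection methods is therefore essential for the quality of downstream remote sensing products and the efficiency of services [2]. Current cloud detection methods for remote sensing images fall roughly into three categories: threshold-based methods, traditional machine learning methods, and methods based on convolutional neural networks (CNN).

Threshold-based algorithms are commonly used for cloud detection in multispectral and hyperspectral images [3-5]. They exploit the reflectance differences between clouds and other objects in the visible to shortwave-infrared range, and they identify and segment clouds with hand-crafted feature extraction rules [6-8]. The Fmask algorithm [3] and its improved versions [9-10] are typical of this class. They are widely used for cloud detection in images from specific satellites, including Landsat [8], Terra/Aqua [11], Sentinel [12], HJ-1 [13], and GF-5 multispectral images [14]. These methods nonetheless have three shortcomings:

- The algorithms are usually designed for specific payloads with many spectral bands. They do not suit mainstream high-resolution satellite data that have only four bands.
- Pixel-based computation easily produces a "salt-and-pepper" effect [15-16].
- The thresholds usually depend on expert knowledge, which makes the methods hard to generalize to complex scenes [17-18].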

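Deep learning has gradually been applied in remote sensing, with remarkable breakthroughs in image fusion, image registration, scene classification, object detection, land cover classification, and geological body identification [26-27]. Unlike traditional machine learning methods, deep learning uses convolution operations to extract target features more effectively [28], and it can extract spatial and spectral information jointly. In recent years, researchers have applied deep learning to cloud detection in satellite images. They have investigated the effectiveness of networks with different architectures [15,18,23-24,29-32] and developed cloud detection methods for different types of payloads [33-38]. However, general-purpose deep learning models that work across several mainstream satellites with different payload parameters still need further research. The main difficulties are:

- Acquiring representative data from many types of satellites and labeling them to form a training sample set is costly.
- Different payloads have inconsistent band combinations, which makes it hard to build a general deep learning model.
- Deep learning models are numerous, and most were proposed for computer vision problems. They need targeted optimization before they can be applied to remote sensing data.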

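This paper proposes a cloud detection model for multi-source satellite images, called the multi-source remote sensing cloud detection network (MCDNet). MCDNet is built on a U-shaped architecture and uses a lightweight backbone. It improves recognition accuracy through multi-scale feature fusion and a gating mechanism. Multi-source satellite images were collected worldwide. They include commonly used foreign data, such as Google and Landsat imagery, and Chinese satellite data, such as GF-1, GF-2, and GF-5 imagery. To keep the model general, only the three true-color bands of every satellite image were retained to build the cloud detection dataset. Several classic semantic segmentation networks were used for comparison. The results show that the proposed method has good application potential for cloud detection.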

1 Methods

1.1 Overall architecture of MCDNet

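MCDNet adopts a U-shaped architecture with three parts: an encoder, a decoder, and a multi-scale fusion module (Fig. 1).

The encoder is built from six DCB (depthwise convolutional block) modules and contains three downsampling layers in total. More downsampling layers were not used, so that as much shallow spatial information as possible is retained and the boundaries in the results are more accurate. Each DCB consists of one 3×3 depthwise convolution, a normalization layer, two ordinary 1×1 convolutions, and an activation layer. Conventional lightweight models use a bottleneck design. In a DCB, by contrast, the intermediate 1×1 layer has more kernels, giving an inverted bottleneck that is narrow at both ends and wide in the middle. Experiments verified that this structure reduces the number of model parameters without degrading overall performance.

The first part of the decoder follows the design of UNet [39]. During bottom-up upsampling, features from the encoder are merged in through skip connections.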
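The sketch below makes the parameter argument concrete. It counts the convolution weights in a DCB-style block and compares them with ordinary 3×3 convolutions. It illustrates the claim under stated assumptions and is not the authors' configuration:

- The expansion ratio of 4 for the wide middle layer is hypothetical.
- The channel width of 64 is hypothetical.
- Normalization-layer parameters are not counted.
- The function names `dcb_params` and `conv3x3_params` are illustrative.

```python
def conv3x3_params(c_in: int, c_out: int, bias: bool = False) -> int:
    """Weights of an ordinary 3x3 convolution from c_in to c_out channels."""
    return 9 * c_in * c_out + (c_out if bias else 0)


def dcb_params(c: int, expansion: int = 4, bias: bool = False) -> int:
    """Weights of a hypothetical DCB: 3x3 depthwise -> 1x1 expand -> 1x1 project.

    The expansion ratio is an assumption; normalization layers are ignored.
    """
    hidden = expansion * c
    depthwise = 9 * c            # one 3x3 filter per channel
    expand = c * hidden          # 1x1 conv widens c -> hidden (the "wide middle")
    project = hidden * c         # 1x1 conv narrows hidden -> c
    total = depthwise + expand + project
    if bias:
        total += c + hidden + c  # one bias per output channel of each conv
    return total


if __name__ == "__main__":
    c = 64
    print("DCB:", dcb_params(c))                                # 9c + 8c^2
    print("one 3x3 conv:", conv3x3_params(c, c))                # 9c^2
    print("two 3x3 convs (UNet-style):", 2 * conv3x3_params(c, c))
```

For c = 64 this prints 33344, 36864 and 73728. Under these assumptions a DCB is only slightly smaller than a single 3×3 convolution. It is less than half the size of the two stacked 3×3 convolutions used in each stage of the original UNet.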

Figure 1

1.2 Multi-scale fusion module

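Downsampling serves two purposes in a CNN. It shrinks the feature maps and so reduces the computational load. It also lets the model grow deeper while keeping a sufficiently large receptive field. Its main drawback is that it discards useful information. In the results, this appears as poorly delineated object boundaries, as in early semantic segmentation networks such as fully convolutional networks (FCN). Combining the features at the multiple scales produced by downsampling has proven effective in computer vision tasks.

This paper proposes a multi-scale fusion module for the U-shaped architecture. During training, the module lets the model learn to weight the scale features that are relevant to each scene, which improves recognition performance. The module works in three steps:

1. A standard CBR module (convolution, normalization, and activation layers) is applied to the decoder output at each scale.
2. All features are upsampled to the original input size.
3. All features are stacked, and a channel attention module weights the useful features.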
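The fusion step above (upsample every decoder scale to the input size, then stack along the channel axis) can be sketched in plain Python. This is a minimal illustration under assumptions, not the authors' code:

- The CBR convolutions are omitted.
- Nearest-neighbour interpolation is assumed, because the paper does not name the upsampling method.
- Feature maps are assumed to be square, with sizes that divide the input size.
- The function names are hypothetical.

```python
from typing import List

Channel = List[List[float]]   # one H x W feature channel
FeatureMap = List[Channel]    # C channels


def upsample_nearest(channel: Channel, factor: int) -> Channel:
    """Nearest-neighbour upsampling: repeat each pixel factor x factor times."""
    return [[v for v in row for _ in range(factor)]
            for row in channel for _ in range(factor)]


def fuse_scales(scale_features: List[FeatureMap], out_size: int) -> FeatureMap:
    """Upsample every scale to out_size x out_size and stack along channels."""
    stacked: FeatureMap = []
    for feat in scale_features:
        size = len(feat[0])   # assumes square maps
        if out_size % size:
            raise ValueError("out_size must be a multiple of each scale's size")
        factor = out_size // size
        stacked.extend(upsample_nearest(ch, factor) for ch in feat)
    return stacked


if __name__ == "__main__":
    coarse = [[[1.0, 2.0], [3.0, 4.0]]]        # 1 channel at 2x2
    fine = [[[0.0] * 4 for _ in range(4)]]     # 1 channel at 4x4
    fused = fuse_scales([coarse, fine], out_size=4)
    print(len(fused), fused[0])
```

The stacked result is what the channel attention module then reweights. A real model would use bilinear or transposed-convolution upsampling on tensors rather than nested lists.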

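The channel attention module works in four steps:

1. A global pooling layer reduces the input features to a vector with C units, where C is the number of channels.
2. A multilayer perceptron applies a nonlinear transformation to this vector.
3. A Sigmoid function maps the transformed vector into the range [0, 1]. Each value can then be regarded as the weight of the corresponding channel.
4. The original features are multiplied by this weight vector, which weights each channel.

In formula form:

s = σ(MLP(GP(F))),

F′_c = s_c·F_c, c = 1, …, C,

where F is the stacked multi-scale feature map with C channels; GP denotes global pooling; MLP denotes the multilayer perceptron; σ is the Sigmoid function; s is the channel weight vector with elements s_c in [0, 1]; and F′ is the weighted output.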
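The plain-Python sketch below shows the channel weighting. The text does not specify the pooling type or the MLP depth, so the sketch assumes squeeze-and-excitation style choices:

- Global average pooling.
- A two-layer MLP with a ReLU hidden layer.
- Hand-supplied weights. A trained model would learn `w1`, `b1`, `w2` and `b2`.

The function `channel_attention` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

FeatureMap = List[List[List[float]]]   # [C][H][W]


def channel_attention(features: FeatureMap,
                      w1: Sequence[Sequence[float]], b1: Sequence[float],
                      w2: Sequence[Sequence[float]], b2: Sequence[float]
                      ) -> Tuple[List[float], FeatureMap]:
    """Weight each channel of `features` by a Sigmoid gate in [0, 1].

    w1: hidden x C weights, b1: hidden biases (first MLP layer, ReLU).
    w2: C x hidden weights, b2: C biases (second MLP layer, Sigmoid).
    """
    # Global average pooling: one value per channel -> vector of length C.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]
    # First MLP layer with ReLU.
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z)) + b)
              for row, b in zip(w1, b1)]
    # Second MLP layer followed by Sigmoid gives one weight per channel.
    s = [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
         for row, b in zip(w2, b2)]
    # Multiply every value in channel c by its weight s[c].
    weighted = [[[s_c * v for v in row] for row in ch] for s_c, ch in zip(s, features)]
    return s, weighted


if __name__ == "__main__":
    feats = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
    weights, out = channel_attention(feats, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0],
                                     [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0])
    print(weights)   # channel 0 is boosted, channel 1 is suppressed
```

With all MLP weights and biases set to zero, every gate equals 0.5. Nonzero weights let the module emphasize some scales and suppress others.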

1.3 Evaluation metrics

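Cloud detection accuracy is evaluated with precision, recall, overall accuracy, F1 score, and intersection over union (IoU). They are computed as:

P = TP/(TP+FP),

R = TP/(TP+FN),

OA = (TP+TN)/(TP+TN+FP+FN),

F1 = 2/(1/P+1/R) = 2PR/(P+R),

IoU = TP/(TP+FP+FN),

where:

- P is precision, R is recall, OA is overall accuracy, F1 is the F1 score, and IoU is the intersection over union.
- TP (true positives) is the number of positive (cloud) samples predicted as positive.
- FN (false negatives) is the number of positive samples predicted as negative.
- FP (false positives) is the number of negative samples predicted as positive.
- TN (true negatives) is the number of negative samples predicted as negative.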
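The five metrics can be computed from a predicted mask and a reference mask as sketched below. The sketch makes these assumptions:

- Masks are flattened 0/1 sequences, with cloud as the positive class.
- Counts are pooled over all pixels. The paper does not say whether it pools counts or averages per tile.

The name `cloud_metrics` is hypothetical. With pooled counts, IoU = F1/(2 - F1) holds exactly, because F1 = 2TP/(2TP+FP+FN).

```python
from typing import Dict, Sequence


def cloud_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """P, R, F1, OA and IoU from flattened binary masks (1 = cloud, 0 = clear)."""
    if len(pred) != len(truth) or len(pred) == 0:
        raise ValueError("masks must be non-empty and the same length")
    pairs = list(zip(pred, truth))
    tp = sum(1 for p, t in pairs if p == 1 and t == 1)
    fp = sum(1 for p, t in pairs if p == 1 and t == 0)
    fn = sum(1 for p, t in pairs if p == 0 and t == 1)
    tn = sum(1 for p, t in pairs if p == 0 and t == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "P": precision,
        "R": recall,
        "F1": f1,
        "OA": (tp + tn) / len(pairs),
        "IoU": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
    }


if __name__ == "__main__":
    # 3 TP, 1 FP, 1 FN, 3 TN
    print(cloud_metrics([1, 1, 0, 0, 1, 0, 1, 0], [1, 0, 0, 1, 1, 0, 1, 0]))
```

For this toy example, P = R = F1 = OA = 0.75 and IoU = 0.6.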

2 Multi-source satellite images and data processing

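Representative open-source cloud detection datasets were collected. They include Google imagery, Landsat series imagery, and GF-1, GF-2, and GF-5 satellite imagery, with spatial resolutions from 0.5 to 30 m (Table 1). The datasets are organized slightly differently, so the original images were re-cropped into 256 × 256 pixel tiles by sequential cropping at a fixed interval. The GF-1 dataset is large, so its tiles were filtered to balance the amount of data at different spatial resolutions as far as possible. Cloud-free, pure-background tiles were removed, and 8,400 tiles were randomly selected from the remainder as training data. The final sample set contains 20,000 tiles in total (256 × 256 × 3).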
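The tiling and GF-1 filtering steps can be sketched as follows. The sketch makes these assumptions:

- The stride equals the tile size, so tiles do not overlap. The paper only says "fixed interval".
- Edge pixels that do not fill a whole tile are dropped.
- Each image has a binary cloud mask, where 1 means cloud.

The function names are hypothetical. In the paper's setting, `k` would be 8,400.

```python
import random
from typing import List, Sequence, Tuple

Origin = Tuple[int, int]   # (row, col) of a tile's top-left pixel


def tile_origins(height: int, width: int, tile: int = 256, stride: int = 256) -> List[Origin]:
    """Top-left corners of full tiles, scanned row by row at a fixed stride.

    Pixels beyond the last full tile are dropped (an assumption).
    """
    return [(r, c)
            for r in range(0, height - tile + 1, stride)
            for c in range(0, width - tile + 1, stride)]


def sample_cloudy_tiles(mask: Sequence[Sequence[int]], origins: List[Origin],
                        tile: int, k: int, seed: int = 0) -> List[Origin]:
    """Drop tiles whose mask has no cloud pixel, then randomly keep up to k."""
    cloudy = [(r, c) for r, c in origins
              if any(any(row[c:c + tile]) for row in mask[r:r + tile])]
    return random.Random(seed).sample(cloudy, min(k, len(cloudy)))


if __name__ == "__main__":
    print(tile_origins(600, 1000))   # 2 rows x 3 columns of 256-pixel tiles
```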

Table 1 Cloud detection datasets of multi-source satellite remote sensing images

Tab.1

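The image tiles were preprocessed before the deep learning models were trained. The data were first analyzed statistically by payload type. Negative values and abnormally high values were removed, and all data were normalized to [0, 1]. The data were then shuffled randomly and split into training, validation, and test sets at a ratio of 6:2:2. All accuracy assessments in the comparison experiments are based on the test set.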
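A minimal sketch of the normalization and the 6:2:2 split follows. It makes these assumptions:

- The per-payload bounds `low` and `high` are hypothetical inputs, for example derived from band statistics.
- Out-of-range pixels are clipped to the bounds rather than discarded. The paper only says such values were removed.

Integer arithmetic fixes the split sizes exactly.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def normalize_band(values: Sequence[float], low: float, high: float) -> List[float]:
    """Clip to [low, high] and rescale to [0, 1].

    low/high are hypothetical per-payload bounds; clipping instead of
    discarding out-of-range pixels is an assumption.
    """
    if high <= low:
        raise ValueError("high must exceed low")
    span = high - low
    return [(min(max(v, low), high) - low) / span for v in values]


def split_622(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle, then split 6:2:2 into training, validation and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 6 // 10
    n_val = len(shuffled) * 2 // 10
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


if __name__ == "__main__":
    print(normalize_band([-5, 0, 500, 1000, 4000], 0, 1000))
    train, val, test = split_622(range(20000))
    print(len(train), len(val), len(test))
```

For the 20,000 tiles described above, this gives 12,000 training, 4,000 validation and 4,000 test tiles.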

3 Experiments and results

3.1 Experimental setup

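Several classic semantic segmentation networks serve as baselines: SegNet [43], UNet [39], PSPNet (pyramid scene parsing network) [44], BiSeNet (bilateral segmentation network) [45], HRNetV2 (high-resolution representation network v2) [46], DeepLabV3+ [47], and MFGNet (multiscale fusion gated network) [36]. All models were trained on the same dataset with the same training parameters. The experiments used the TensorFlow (1.13.1) framework on an NVIDIA Tesla V100 GPU. The settings were as follows:

- Optimizer: Adam (adaptive moment estimation), with an initial learning rate of 0.0005.
- Loss function: "Categorical_Crossentropy".
- Training: 30 epochs per model. The best checkpoint of each model was used for comparison.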
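The loss named above averages, over pixels, the negative log-probability that the softmax output assigns to the true class. A plain-Python sketch follows. It makes these assumptions:

- Two classes per pixel (clear, cloud) with one-hot labels.
- An illustrative clipping constant `eps` that avoids log(0).

The function name is hypothetical. This is not the TensorFlow implementation.

```python
import math
from typing import Sequence


def categorical_crossentropy(y_true: Sequence[Sequence[float]],
                             y_pred: Sequence[Sequence[float]],
                             eps: float = 1e-7) -> float:
    """Mean categorical cross-entropy over pixels.

    y_true: one-hot label per pixel, e.g. [clear, cloud].
    y_pred: softmax probabilities per pixel, clipped by eps to avoid log(0).
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        total -= sum(ti * math.log(min(max(pi, eps), 1.0 - eps))
                     for ti, pi in zip(t, p))
    return total / len(y_true)


if __name__ == "__main__":
    labels = [[0, 1], [1, 0]]           # pixel 1 is cloud, pixel 2 is clear
    probs = [[0.2, 0.8], [0.9, 0.1]]    # model's softmax output
    print(categorical_crossentropy(labels, probs))   # -(ln 0.8 + ln 0.9) / 2
```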

3.2 Comparison of model performance

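Figure 2 shows the training and validation accuracy during training. As training proceeds, the training accuracy rises quickly and the loss falls quickly. Both level off after about 20 epochs. Throughout this period, the training and validation accuracy curves follow the same trend, which indicates that the model does not noticeably overfit.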

Figure 2

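Table 2 lists the results of each model on the cloud detection task. All of the CNNs tested are effective for cloud detection, and every one reaches an overall accuracy above 90%. MCDNet has the best F1 score, overall accuracy (0.97), and IoU. It does not lead on every metric. HRNetV2 has the highest precision (0.94) and BiSeNet the highest recall (0.96), but each scores much lower on the other of the two. MCDNet is the only model whose precision (0.93) and recall (0.95) are both at least 0.93. Its recall of 0.95 indicates a low false-negative rate. The F1 score combines precision and recall, so it better represents a model's overall performance.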

Table 2 Cloud detection accuracy on multi-source remote sensing images

Tab.2

| Model | P | R | F1 | OA | IoU |
|---|---|---|---|---|---|
| SegNet | 0.89 | 0.83 | 0.86 | 0.93 | 0.75 |
| PSPNet | 0.87 | 0.86 | 0.86 | 0.93 | 0.76 |
| HRNetV2 | 0.94 | 0.85 | 0.89 | 0.94 | 0.80 |
| UNet | 0.85 | 0.94 | 0.89 | 0.95 | 0.80 |
| BiSeNet | 0.84 | 0.96 | 0.89 | 0.95 | 0.81 |
| DeepLabV3+ | 0.91 | 0.90 | 0.91 | 0.95 | 0.83 |
| MFGNet | 0.91 | 0.91 | 0.91 | 0.96 | 0.84 |
| MCDNet | 0.93 | 0.95 | 0.94 | 0.97 | 0.89 |
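The rows of Table 2 can be checked for internal consistency. F1 should match the harmonic mean of P and R. If all metrics come from the same pooled TP, FP and FN counts, then IoU should equal F1/(2 - F1). The sketch below recomputes both from the reported two-decimal values. The helper names are hypothetical. Agreement within rounding is consistent with pooled counts, but it does not prove how the authors computed the metrics.

```python
# (P, R, F1, OA, IoU) as reported in Table 2.
TABLE2 = {
    "SegNet": (0.89, 0.83, 0.86, 0.93, 0.75),
    "PSPNet": (0.87, 0.86, 0.86, 0.93, 0.76),
    "HRNetV2": (0.94, 0.85, 0.89, 0.94, 0.80),
    "UNet": (0.85, 0.94, 0.89, 0.95, 0.80),
    "BiSeNet": (0.84, 0.96, 0.89, 0.95, 0.81),
    "DeepLabV3+": (0.91, 0.90, 0.91, 0.95, 0.83),
    "MFGNet": (0.91, 0.91, 0.91, 0.96, 0.84),
    "MCDNet": (0.93, 0.95, 0.94, 0.97, 0.89),
}


def f1_from_pr(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def iou_from_f1(f1: float) -> float:
    """IoU implied by F1 when both come from the same pooled TP/FP/FN counts."""
    return f1 / (2 - f1)


if __name__ == "__main__":
    for name, (p, r, f1, _oa, iou) in TABLE2.items():
        print(f"{name:<11} F1 {f1:.2f} (from P, R: {f1_from_pr(p, r):.3f})  "
              f"IoU {iou:.2f} (from F1: {iou_from_f1(f1):.3f})")
```

Every row agrees to within 0.01. The largest F1 gap is BiSeNet, at 0.896 recomputed against 0.89 reported.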

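IoU measures the overlap between the prediction and the ground truth, so it is more convincing for segmentation tasks. MCDNet reaches an F1 score of 0.94 and an IoU of 0.89. This is clearly ahead of the runner-up, MFGNet, at 0.91 and 0.84. Overall, the proposed model outperforms the other methods on this dataset. Because the dataset mixes several types of satellite data, the result also suggests that the model is more robust across sensors.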

3.3 Ablation experiments

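Three ablation experiments, with MCDNet as the baseline, further verify the effectiveness of the model's key structures (Table 3):

- MCDNet-withoutAT is MCDNet with the attention mechanism removed.
- MCDNet-Xcep is MCDNet with its encoder replaced by Xception.
- MCDNet-withoutDC is MCDNet with the depthwise convolutions replaced by ordinary convolutions.

Every one of these modifications lowers the F1 score and IoU. The channel attention fusion module has the largest effect: removing it lowers recall by 3 percentage points and IoU by 4 percentage points.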

Table 3 Accuracy of the MCDNet ablation experiments

Tab.3

| Model | P | R | F1 | OA | IoU |
|---|---|---|---|---|---|
| MCDNet-withoutAT | 0.92 | 0.92 | 0.92 | 0.96 | 0.85 |
| MCDNet-Xcep | 0.91 | 0.96 | 0.93 | 0.97 | 0.87 |
| MCDNet-withoutDC | 0.92 | 0.95 | 0.93 | 0.97 | 0.88 |
| MCDNet | 0.93 | 0.95 | 0.94 | 0.97 | 0.89 |
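The ablation comparison can be read as per-metric changes, in percentage points, relative to the full model. The sketch below computes these changes from the reported Table 3 values. The function and constant names are illustrative only.

```python
from typing import Dict, Sequence

METRICS = ("P", "R", "F1", "OA", "IoU")
# Values as reported in Table 3.
MCDNET = (0.93, 0.95, 0.94, 0.97, 0.89)
VARIANTS = {
    "MCDNet-withoutAT": (0.92, 0.92, 0.92, 0.96, 0.85),
    "MCDNet-Xcep": (0.91, 0.96, 0.93, 0.97, 0.87),
    "MCDNet-withoutDC": (0.92, 0.95, 0.93, 0.97, 0.88),
}


def point_changes(variant: Sequence[float],
                  baseline: Sequence[float] = MCDNET) -> Dict[str, int]:
    """Per-metric change versus full MCDNet in percentage points (negative = worse)."""
    return {m: round((v - b) * 100) for m, v, b in zip(METRICS, variant, baseline)}


if __name__ == "__main__":
    for name, values in VARIANTS.items():
        print(name, point_changes(values))
```

For MCDNet-withoutAT this gives -3 points of recall and -4 points of IoU. MCDNet-Xcep gains 1 point of recall but still loses F1 and IoU.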

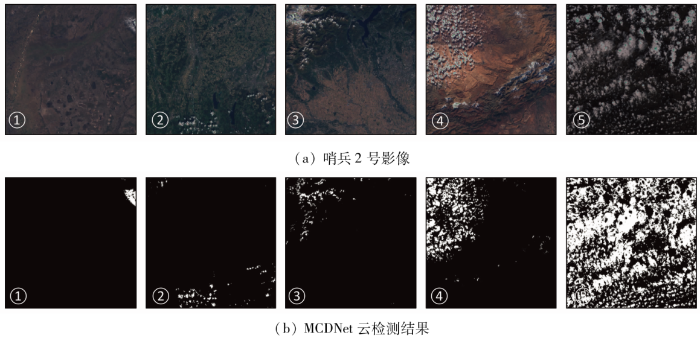

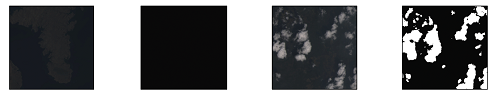

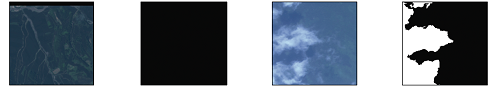

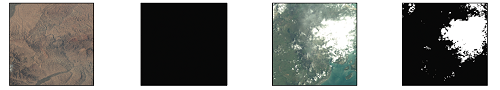

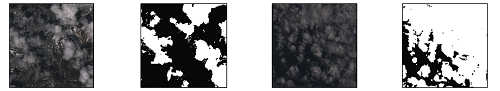

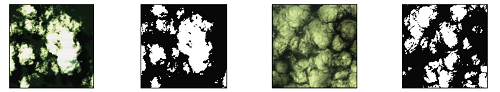

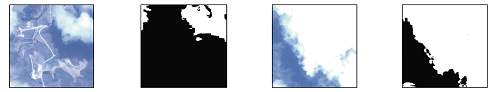

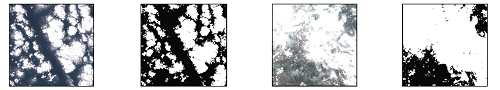

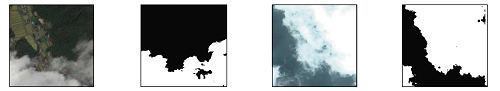

3.4 Visual evaluation of cloud detection results

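To better show MCDNet's advantages for cloud detection, a set of test images was randomly selected from the test data. The set covers every payload type and different degrees of cloud cover. As Table 4 shows, the test images include many land cover scenes, such as desert, snow, forest, farmland, rivers, and bare land, with varying cloud coverage and cloud types. The cloud masks predicted by MCDNet show that it identifies clouds accurately in all of these scenes. Notably, easily confused targets such as snow are also identified correctly, and the model handles thin clouds reasonably well. This suggests that training on a large number of multi-source satellite image samples has enabled the model to learn the semantic representation of clouds.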

Table 4 Multi-source true-color images with different cloud cover and the corresponding MCDNet cloud detection results

Tab.4

(Image grid. Rows: Landsat, GF-5, GF-2, and GF-1. For each of 0%, 20%, 45%, and 80% cloud cover, the grid shows a true-color image and the MCDNet cloud detection result.)
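Table 4 groups the test images by cloud cover. The paper does not say how each image's cloud cover was determined. One hypothetical way is to measure the cloud fraction of a binary mask and assign the nearest of the four groups, as sketched below. The function names and the nearest-group rule are assumptions.

```python
from typing import Sequence, Tuple

LEVELS: Tuple[int, ...] = (0, 20, 45, 80)   # cloud-cover groups shown in Table 4


def cloud_cover_percent(mask: Sequence[Sequence[int]]) -> float:
    """Percentage of pixels flagged as cloud (nonzero) in a binary mask."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("empty mask")
    cloudy = sum(1 for row in mask for v in row if v)
    return 100.0 * cloudy / total


def nearest_level(pct: float, levels: Tuple[int, ...] = LEVELS) -> int:
    """Assign a cloud-cover percentage to the closest group (hypothetical rule)."""
    return min(levels, key=lambda lv: abs(lv - pct))


if __name__ == "__main__":
    mask = [[1, 1, 0, 0, 0], [1, 1, 0, 0, 0], [0] * 5, [0] * 5]
    pct = cloud_cover_percent(mask)
    print(pct, nearest_level(pct))   # 20.0 20
```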

3.5 Generalization test

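A general-purpose cloud detection model has two key prerequisites: a large number of samples and a generally effective model design. Commonly used optical satellites differ in their application focus and in their number of bands. Earlier cloud detection models were therefore mostly customized to payload characteristics and transferred poorly. This paper uses RGB true-color images as the input for two reasons. First, it maximizes the number of samples while lowering the cost of producing them. Second, it tests whether unifying data from different payloads into three channels benefits model design.

The experimental data include both DN values and reflectance data. After outliers were removed, all data were normalized to [0, 1]. As a result, data acquired by different sensors at different times look relatively similar (Fig. 3). A CNN can then learn a general semantic representation for cloud detection from the distribution patterns, texture features, and spatial relationships of clouds in true-color images.

To test this claim further, data from a payload type absent from the training samples were used as the test target. The previously trained MCDNet, with its weights unchanged, was applied directly to five Sentinel-2 scenes. As Fig. 3 shows, the results remain accurate, with an F1 score of 0.90 and an IoU of 0.82.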
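Reducing each payload to a true-color input means picking its red, green and blue bands. The sketch below shows a lookup table for a few of the sensors mentioned in this paper:

- Landsat-8 OLI: bands 4, 3 and 2 are red, green and blue.
- Sentinel-2 MSI: B04, B03 and B02 are red, green and blue.
- GF-1 WFV and GF-2 PMS multispectral: bands 1 to 4 are blue, green, red and near-infrared.

These mappings should be checked against each product's metadata before use. The sketch assumes the bands are stored in ascending band-number order. The table and function names are hypothetical. Each selected band would then be normalized as described in Section 2.

```python
from typing import Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")

# (R, G, B) as 1-based band numbers; verify against each product's metadata.
TRUE_COLOR_BANDS: Dict[str, Tuple[int, int, int]] = {
    "Landsat-8 OLI": (4, 3, 2),
    "Sentinel-2 MSI": (4, 3, 2),   # B04, B03, B02
    "GF-1 WFV": (3, 2, 1),
    "GF-2 PMS": (3, 2, 1),
}


def to_true_color(bands: Sequence[T], sensor: str) -> List[T]:
    """Pick the red, green and blue bands from a stack in band-number order."""
    r, g, b = TRUE_COLOR_BANDS[sensor]
    return [bands[r - 1], bands[g - 1], bands[b - 1]]


if __name__ == "__main__":
    stack = ["band1", "band2", "band3", "band4"]   # placeholders for 2D arrays
    print(to_true_color(stack, "GF-1 WFV"))        # ['band3', 'band2', 'band1']
```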

Figure 3 Cloud detection results of MCDNet on Sentinel-2 images

4 Conclusions

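This study proposes a multi-scale feature fusion neural network for cloud detection. It combines a lightweight backbone with multi-scale feature fusion and a channel attention mechanism, and it achieves high-accuracy cloud detection on several types of satellite images. Compared with several classic semantic segmentation networks, it achieved the best detection performance. The main conclusions are: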

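1) True-color images combined with a semantic segmentation network enable efficient and accurate cloud detection in medium- and high-spatial-resolution satellite images.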

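2) The proposed model builds on the conventional U-shaped architecture. Its lightweight backbone, multi-scale feature fusion, and attention mechanism effectively improve performance and give it a clear advantage over classic semantic segmentation models.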

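3) MCDNet was trained on about 20,000 globally distributed samples. It achieved accuracy above 90% in cloud detection across several types of satellite images, showing good robustness. It offers a reference for designing general-purpose satellite cloud detection models.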

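So far, however, the only Chinese satellite images this study has tested are GF-1, GF-2, and GF-5 images. Future work will apply the model to more types of Chinese satellite data. The aim is to refine it step by step into a general model that meets operational production requirements.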

References

Spatial and temporal distribution of clouds observed by MODIS onboard the Terra and Aqua satellites [J]. DOI: 10.1109/TGRS.2012.2227333.

Efficient solution of large-scale domestic hyperspectral data processing and geological application [C].

Characterization of the Landsat7 ETM+ automated cloud-cover assessment (ACCA) algorithm [J]. DOI: 10.14358/PERS.72.10.1179.

Object-based cloud and cloud shadow detection in Landsat imagery [J]. DOI: 10.1016/j.rse.2011.10.028.

Improvement and expansion of the Fmask algorithm: Cloud, cloud shadow, and snow detection for Landsats 4-7, 8, and Sentinel-2 images [J]. DOI: 10.1016/j.rse.2014.12.014.

Cloud detection using satellite measurements of infrared and visible radiances for ISCCP [J]. DOI: 10.1175/1520-0442(1993)006<2341:CDUSMO>2.0.CO;2.

An algorithm for snow and ice detection using AVHRR data: An extension to the APOLLO software package [J]. DOI: 10.1080/01431168908903929.

Global distribution of cloud cover derived from NOAA/AVHRR operational satellite data [J].

Improving Fmask cloud and cloud shadow detection in mountainous area for Landsats 4-8 images [J]. DOI: 10.1016/j.rse.2017.07.002.

Fmask 4.0: Improved cloud and cloud shadow detection in Landsats 4-8 and Sentinel-2 imagery [J]. DOI: 10.1016/j.rse.2019.05.024.

An automatic method for screening clouds and cloud shadows in optical satellite time series in cloudy regions [J]. DOI: 10.1016/j.rse.2018.05.024.

Improvement of the Fmask algorithm for Sentinel-2 images: Separating clouds from bright surfaces based on parallax effects [J]. DOI: 10.1016/j.rse.2018.04.046.

Cloud and snow discrimination for CCD images of HJ-1A/B constellation based on spectral signature and spatio-temporal context [J]. DOI: 10.3390/rs8010031.

Cloud detection algorithm for images of visual and infrared multispectral imager [J].

Distinguishing cloud and snow in satellite images via deep convolutional network [J]. DOI: 10.1109/LGRS.2017.2735801.

Object-based convolutional neural networks for cloud and snow detection in high-resolution multispectral imagers [J]. DOI: 10.3390/w10111666.


A new Landsat8 cloud discrimination algorithm using thresholding tests [J]. DOI: 10.1080/01431161.2018.1506183.

Cloud detection in remote sensing images based on multiscale features-convolutional neural network [J]. DOI: 10.1109/TGRS.36.

Precipitation estimation from remotely sensed imagery using an artificial neural network cloud classification system [J]. DOI: 10.1175/JAM2173.1.


Development of methods for mapping global snow cover using moderate resolution imaging spectroradiometer data [J]. DOI: 10.1016/0034-4257(95)00137-P.

Introducing two random forest based methods for cloud detection in remote sensing images [J]. DOI: 10.1016/j.asr.2018.04.030.

A hybrid approach for fog retrieval based on a combination of satellite and ground truth data [J]. DOI: 10.3390/rs10040628.


Cloud classification of satellite radiance data by multicategory support vector machines [J]. DOI: 10.1175/1520-0426(2004)021<0159:CCOSRD>2.0.CO;2.

Development of a support vector machine based cloud detection method for MODIS with the adjustability to various conditions [J]. DOI: 10.1016/j.rse.2017.11.003.

Deep learning based cloud detection for medium and high resolution remote sensing images of different sensors [J]. DOI: 10.1016/j.isprsjprs.2019.02.017.

Deep learning in remote sensing applications: A meta-analysis and review [J]. DOI: 10.1016/j.isprsjprs.2019.04.015.

3D autoencoder algorithm for lithological mapping using ZY-1 02D hyperspectral imagery: A case study of Liuyuan region [J].

Survey of deep-learning approaches for remote sensing observation enhancement [J]. DOI: 10.3390/s19183929.


CDnet: CNN-based cloud detection for remote sensing imagery [J]. DOI: 10.1109/TGRS.36.

A cloud detection algorithm for satellite imagery based on deep learning [J]. DOI: 10.1016/j.rse.2019.03.039.


Fast cloud segmentation using convolutional neural networks [J]. DOI: 10.3390/rs10111782.


Cloud detection algorithm of remote sensing image based on DenseNet and attention mechanism [J].

Cloud and cloud shadow detection in Landsat imagery based on deep convolutional neural networks [J]. DOI: 10.1016/j.rse.2019.03.007.

Cloud-net+: A cloud segmentation CNN for Landsat8 remote sensing imagery optimized with filtered jaccard loss function [J/OL].

A cloud boundary detection scheme combined with ASLIC and CNN using ZY-3, GF-1/2 satellite imagery [J].

A cloud detection method for Landsat8 images based on PCANet [J]. DOI: 10.3390/rs10060877.

An effective cloud detection method for Gaofen-5 images via deep learning [J]. DOI: 10.3390/rs12132106.


A deep learning method for Landsat image cloud detection without manually labeled data [J].

U-net: Convolutional networks for biomedical image segmentation [C]//International Conference on Medical Image Computing and Computer-Assisted Intervention.

Cloud-Net: An end-to-end cloud detection algorithm for Landsat8 imagery [C].

A geographic information-driven method and a new large scale dataset for remote sensing cloud/snow detection [J]. DOI: 10.1016/j.isprsjprs.2021.01.023.

DABNet: Deformable contextual and boundary-weighted network for cloud detection in remote sensing images [J].

SegNet: A deep convolutional encoder-decoder architecture for image segmentation [J]. DOI: 10.1109/TPAMI.34.

Pyramid scene parsing network [C].

BiSeNet: Bilateral segmentation network for real-time semantic segmentation [C].

High-resolution representations for labeling pixels and regions [J/OL].

Encoder-decoder with atrous separable convolution for semantic image segmentation [C].