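0 Introduction

Coal is an important fossil energy source that substantially supported social development in the 19th and 20th centuries. However, coal mining and use release large amounts of methane and CO2, which is widely regarded as one of the main causes of current global warming[1]. Over the past 10 years, calls to reduce coal mining and consumption have grown worldwide; for example, the G7 announced in 2024 that its members would close all of their coal-fired power plants by 2035. Nevertheless, the world still produces about 8 billion t of coal each year, and many countries, such as China and India, still depend heavily on coal mining for energy. Monitoring the production status of coal mines is therefore necessary for assessing the progress of the energy transition and for formulating strategies to address climate change.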

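Because open-pit coal mines are distributed around the world and operated by many mining companies, public data on their production status are scarce. Over the past 20 years, the rise of remote sensing has offered a new solution. Some researchers have applied supervised classification and change detection to remote sensing images to monitor land cover in open-pit coal mines and thereby extract the mining extent[2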

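In open-pit coal mining, the ore and rock are usually divided into horizontal layers of a certain thickness and mined layer by layer from top to bottom, with each layer kept a certain distance ahead of the one below. During mining, the working levels form a stepped shape in space, and each step is a bench. As the basic geometric element of open-pit coal mining, the bench therefore effectively reflects production activity within the mining extent. When an open-pit coal mine is in production, the benches within it necessarily change, for example by being added, moved, or extended. However, benches inside an open-pit coal mine are numerous and change quickly, so shallow image information such as color, length, and spectral reflectance is insufficient to separate the many benches in an image, and deep image information must be fully exploited. Existing studies have used conventional convolutional neural networks (CNNs) to exploit deep remote sensing image information for monitoring land cover change in open-pit coal mines. Such networks are strong at extracting local features of mining areas, but their receptive field is often constrained by the network structure and the convolutional layer parameters, which makes it difficult to take global detail features into account, so their ability to identify benches within open-pit coal mines is limited.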

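The Transformer model was proposed by Vaswani et al.[8] in 2017; it dispenses with convolution and recurrence and relies on the attention mechanism for natural language processing. Dosovitskiy et al.[9] subsequently proposed the Vision Transformer on this basis and applied it to image classification with excellent results, demonstrating the strong performance of the Transformer architecture in computer vision. SegFormer[10], developed from these models, has attracted wide attention in semantic segmentation and has two main advantages. First, a hierarchical Transformer encoder outputs multi-level, multi-scale features, which effectively improves segmentation performance. Second, a lightweight multilayer perceptron (MLP) in the decoder balances local and global attention while reducing computational cost. SegFormer's performance has been demonstrated in many applications. Yang Jingyi et al.[11] applied SegFormer to ultrasound image segmentation and obtained the best performance in comparisons with U-Net and other models; Lin et al.[12] used SegFormer for coastal wetland classification, with notable results in complex scene classification; Li et al.[13] embedded several transposed convolution sampling modules in the SegFormer decoder and fused an atrous spatial pyramid pooling decoding module for building detection, alleviating the missed and false detections caused by the loss of detail features.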

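This paper introduces SegFormer into the complex scenes of open-pit coal mines, develops a model named BenchSegNet for open-pit coal mine bench extraction, and tests and discusses the effectiveness and practicality of the method.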

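1 Study area and data sources

1.1 Study area

The model developed in this paper is applied to the Manglai open-pit coal mine to test the effectiveness and practicality of the proposed method. The Manglai open-pit coal mine is located in Sonid Left Banner, Xilingol League, Inner Mongolia Autonomous Region (44°02'01″~44°02'52″N, 113°12'30″~113°14'26″E). The terrain is high in the northeast and low in the southwest. The mine covers 15.627 km², with resource reserves of 481 million t and an annual production capacity of 10 million t[16].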

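1.2 Data sources and preprocessing

This study uses Sentinel-2 imagery from the European Space Agency (ESA). Sentinel-2 is a high-resolution multispectral imaging satellite that carries one multispectral imager, provides global coverage, and has a revisit period of 5 d. Sentinel-2 imagery is widely used and, compared with other openly available imagery, offers higher spatial resolution, clearer images, and richer detail[17]. This paper mainly uses the visible and near-infrared bands of Sentinel-2 images over open-pit coal mines in northern China, at a spatial resolution of 10 m and processing level L2A, which provides atmospherically corrected bottom-of-atmosphere reflectance. After acquisition, the images were preprocessed by clipping and masking.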

2 Methods

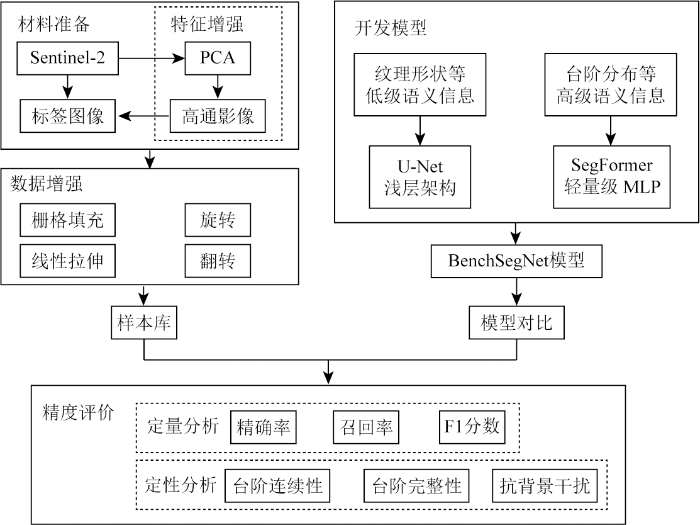

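The technical framework of this study is shown in Fig. 1 and consists of four parts:

Fig. 1

① Sentinel-2 images are enhanced using principal component analysis (PCA) and high-pass filtering, and bench labels are then drawn; ② the training samples are optimized by raster padding and linear stretching, and the dataset is expanded through data augmentation such as rotation and flipping; ③ a deep learning model for open-pit coal mine bench extraction, BenchSegNet, is developed on the basis of the shallow U-Net architecture and SegFormer; ④ BenchSegNet is evaluated using precision, recall, F1 score, and other metrics and compared with other algorithms.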

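2.1 Sample library construction

The bench is the smallest vertical mining unit in an open-pit coal mine. Benches typically appear stepped, and each bench consists of two adjoining parts: a bench floor and a bench face. Within a bench, the floor has a small dip angle and the face a large one, so the sensor receives more reflected electromagnetic energy from the floor than from the face. In remote sensing images, the floor therefore shows higher reflectance and appears as a bright stripe, while the face appears as a dark line. Because the face is far narrower than the floor in the image, it is difficult to extract both from the image at the same time. When producing bench labels, this paper therefore takes the bench floor connecting the upper and lower bench faces as the bench.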

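Bench features are not distinct in the original images, so feature enhancement is needed to highlight the difference between bench floors and bench faces. Experiments showed that applying a PCA transform and high-pass filtering to the four Sentinel-2 bands with 10 m spatial resolution gives good results. To also retain information such as the spectral characteristics of benches, labels were drawn and BenchSegNet was trained on the combination of the original images and the feature enhancement results, whereas the other models took only the feature-enhanced images as input.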
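The enhancement step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the paper does not state which principal components are kept or which high-pass kernel is used, so the 3×3 box-filter high-pass, the choice of the first component, and the function names `pca_transform` and `high_pass` are assumptions, and a random array stands in for a four-band Sentinel-2 tile.

```python
import numpy as np


def pca_transform(image):
    """Project an (H, W, C) image onto its principal components,
    ordered from largest to smallest variance."""
    h, w, c = image.shape
    pixels = image.reshape(-1, c).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]       # reorder to descending variance
    components = centered @ eigvecs[:, order]
    return components.reshape(h, w, c)


def high_pass(band, size=3):
    """High-pass filter a 2-D band by subtracting its size x size local mean
    (edge-padded box filter). The kernel choice is an assumption."""
    band = band.astype(np.float64)
    pad = size // 2
    padded = np.pad(band, pad, mode="edge")
    smooth = np.zeros_like(band)
    for dy in range(size):
        for dx in range(size):
            smooth += padded[dy:dy + band.shape[0], dx:dx + band.shape[1]]
    smooth /= size * size
    return band - smooth


# Stand-in for a 4-band, 10 m Sentinel-2 tile (hypothetical data).
rng = np.random.default_rng(0)
image = rng.random((64, 64, 4))

pcs = pca_transform(image)
texture = high_pass(pcs[..., 0])  # enhanced texture from the first component
```

Subtracting a local mean keeps abrupt brightness changes, such as the transition from a bright bench floor to a dark bench face, and suppresses slowly varying background.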

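At present, no open-source bench dataset exists in the field of open-pit coal mine remote sensing. Based on the above, this paper produced an open-pit coal mine bench dataset by manual annotation, cropped the images and labels uniformly to 256 pixels × 256 pixels, and applied raster padding to samples smaller than this size. Data augmentation helps mitigate model overfitting[18]. The dataset was expanded by rotation and flipping, yielding 1,640 groups of training samples in total. The training, validation, and test sets were split in an 8∶1∶1 ratio, with no overlap between the areas from which their samples were drawn.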
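The padding and augmentation described above might look like the following sketch. The paper does not specify the fill value or exactly which rotations and flips were used, so zero-filling on the bottom and right edges, the eight rotation/flip combinations, and the names `pad_to_tile` and `augment` are assumptions for illustration only.

```python
import numpy as np


def pad_to_tile(arr, tile=256, fill=0):
    """Pad the bottom/right of an (H, W) or (H, W, C) array up to tile x tile."""
    h, w = arr.shape[:2]
    if h > tile or w > tile:
        raise ValueError("sample is larger than the tile size")
    pad = [(0, tile - h), (0, tile - w)] + [(0, 0)] * (arr.ndim - 2)
    return np.pad(arr, pad, mode="constant", constant_values=fill)


def augment(image, label):
    """Yield 8 variants of an (image, label) pair: four 90-degree rotations,
    each with and without a horizontal flip. The same transform is applied
    to image and label so that they stay aligned."""
    for k in range(4):
        img_r = np.rot90(image, k, axes=(0, 1))
        lab_r = np.rot90(label, k, axes=(0, 1))
        yield img_r, lab_r
        yield np.flip(img_r, axis=1), np.flip(lab_r, axis=1)


# Hypothetical undersized sample: 200 x 230 pixels, 4 bands.
image = pad_to_tile(np.ones((200, 230, 4)))
label = pad_to_tile(np.ones((200, 230), dtype=np.uint8))
pairs = list(augment(image, label))
```

Applying identical geometric transforms to the image and its label is what keeps the bench annotation valid after augmentation.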

2.2 BenchSegNet model

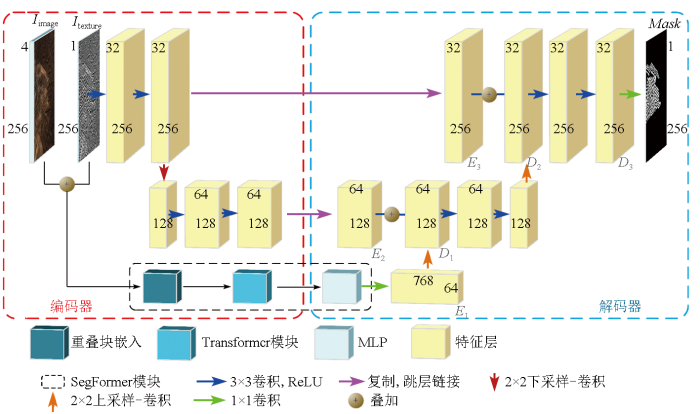

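The bench extraction model for open-pit mining areas designed in this paper, BenchSegNet, is shown in Fig. 2. The model has an encoder-decoder architecture that takes SegFormer as its base model and is completed by combining it with U-Net. In the encoder, besides the input $I_{texture}$, a new input $I_{image}$ is added that connects only to the SegFormer branch. In the decoder, to match the SegFormer output to U-Net, several convolution and upsampling operations are added after the SegFormer module so that the high-level semantic information extracted by SegFormer is fused with the shallow texture features output by the shallow U-Net encoder, ultimately strengthening the model's ability to learn texture features.

Fig. 2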

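Suppose a group of images contains the original remote sensing image and the texture feature image: {$I_{image}$∈

First, in the encoder, $I_{image}$ and $I_{texture}$ are fused into a multi-channel image $I_{concat}$ of size {H×W×(C1+C2)}, which is fed into the SegFormer module; after a 1×1 convolution, the output has the corresponding size {

Next, in the decoder,

Finally,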

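2.3 Positive-sample-weighted loss function

Benches occupy only a small part of an open-pit coal mine; the unmined interior and the mine's surroundings are background. Because negative samples far outnumber positive samples in the dataset, that is, the classes are severely imbalanced, the loss function L used in this paper is a binary cross-entropy loss with positive-sample weighting ($L_{BCE}$), so as to obtain more accurate bench extraction results. The final loss function L is defined as: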

$$L=-\left[p\cdot y\ln\sigma(x)+(1-y)\ln\left(1-\sigma(x)\right)\right],$$

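where x is the model output; y is the label; σ is the activation function; and p is the positive-sample weight, used to counteract the tendency of excess negative samples to produce high precision but low recall.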
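As a minimal sketch of how the positive-sample weight acts, the loss can be evaluated in plain Python, written with the conventional leading minus sign so that the loss is non-negative and taking σ to be the sigmoid. The logits, labels, and the weight value 5.0 are hypothetical (the paper does not report p), and the function name `weighted_bce` is illustrative. In PyTorch, `torch.nn.BCEWithLogitsLoss` accepts a `pos_weight` argument that plays the same role.

```python
import math


def sigmoid(x):
    """Logistic function, used here as the activation sigma."""
    return 1.0 / (1.0 + math.exp(-x))


def weighted_bce(logits, labels, pos_weight):
    """Mean over pixels of -[p*y*ln(s(x)) + (1-y)*ln(1-s(x))].

    Not numerically hardened: very large |x| would make log() fail.
    """
    total = 0.0
    for x, y in zip(logits, labels):
        s = sigmoid(x)
        total += -(pos_weight * y * math.log(s) + (1 - y) * math.log(1 - s))
    return total / len(logits)


# Hypothetical pixel logits and labels (1 = bench, 0 = background).
logits = [2.0, -1.0, 0.5, -3.0]
labels = [1, 1, 0, 0]

plain = weighted_bce(logits, labels, pos_weight=1.0)     # ordinary BCE
weighted = weighted_bce(logits, labels, pos_weight=5.0)  # missed benches cost more
```

With p > 1, a bench pixel predicted with low confidence contributes more to the loss than an equally wrong background pixel, which pushes the model toward higher recall.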

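2.4 Accuracy evaluation

Bench extraction in open-pit coal mines can be treated as a binary classification task. However, benches appear in remote sensing images as dense, fine linear targets with irregular linear texture, and positive samples make up only a very small share, so a single accuracy metric is far from sufficient. To evaluate the algorithms accurately, this paper uses accuracy, precision, recall, and F1 score as evaluation metrics. Accuracy evaluates performance over all samples and reflects the model's ability to predict both positive and negative samples correctly. Precision measures how reliable the predicted positives are: the higher the precision, the more likely a sample predicted as positive is truly positive. Recall is the percentage of actual bench pixels that are correctly predicted. The F1 score combines precision and recall and reflects the model's overall predictive ability. The formulas are:

$$A=\frac{TP+TN}{TP+TN+FP+FN},$$

$$P=\frac{TP}{TP+FP},$$

$$R=\frac{TP}{TP+FN},$$

$$F_1=\frac{2PR}{P+R},$$

where A is accuracy; P is precision; R is recall; $F_1$ is the F1 score; TP is the number of positive samples predicted as positive (true positives); FP is the number of negative samples predicted as positive (false positives); TN is the number of negative samples predicted as negative (true negatives); and FN is the number of positive samples predicted as negative (false negatives).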
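The four metrics follow directly from the confusion-matrix counts defined above. The sketch below uses hypothetical counts, not values from the paper, to show why accuracy alone is misleading when background pixels dominate; the function name `evaluate` is illustrative.

```python
def evaluate(tp, fp, tn, fn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts: 40 bench pixels among 1,000 pixels in total.
metrics = evaluate(tp=30, fp=10, tn=950, fn=10)
```

In this example accuracy is 0.98 while precision, recall, and F1 are all 0.75, because the 950 correctly classified background pixels dominate the accuracy.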

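3 Experimental results and analysis

3.1 Bench extraction with BenchSegNet

All experiments in this paper were run in the PyTorch framework (version 2.1) on an NVIDIA GeForce RTX 3060 GPU with 6 GB of memory, with RMSprop and AdamW as optimizers, a batch size of 4, 10 training epochs, and a learning rate of 0.0001. The backbone of the SegFormer module is MiT-B3.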

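To verify the effectiveness of the proposed BenchSegNet for open-pit coal mine bench extraction, several other commonly used methods were tested: the traditional machine learning methods random forest (RF) and support vector machine (SVM), and the deep learning methods U-Net[19], ASPP-UNet with an atrous spatial pyramid pooling (ASPP) module, and SegFormer. All models were trained with $L_{BCE}$ as the loss function.

U-Net is a conventional CNN derived from the fully convolutional network (FCN). It was originally designed for medical image segmentation, but its distinctive skip connections make it better than FCN at recognizing fine targets and combining context, so it has been widely applied in other fields. ASPP-UNet is a U-Net with the ASPP module proposed in DeepLabV3+[20], which improves segmentation of feature maps at different scales. SegFormer is a simple and efficient semantic segmentation model composed mainly of Transformer modules and a lightweight MLP module. Because the original SegFormer model could not be trained properly, the SegFormer used in this paper adds two transposed convolution operations before the last fully connected layer.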

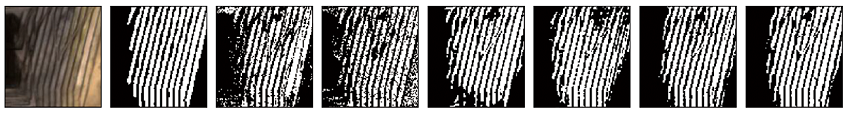

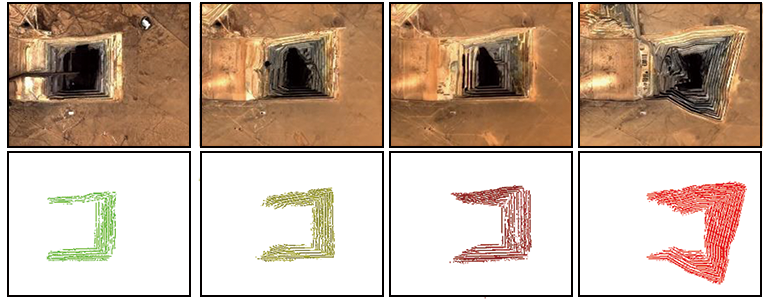

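The bench extraction results of each algorithm are shown in Table 1. SVM and RF give poor overall results: as traditional machine learning methods, they classify using only pixel and band information, which produces a large amount of noise and many false and missed detections. U-Net, a conventional CNN, effectively extracts some of the benches and performs well where the pattern is regular, but it tends to miss some large-scale benches and falsely detect some small-scale ones, and on curved benches it performs even worse than the traditional machine learning methods. ASPP-UNet extracts large-scale benches much better than U-Net, but some benches are still not extracted completely and show breaks. SegFormer extracts more comprehensive information than the conventional CNNs and effectively reduces misclassification, but it represents bench details less well than they do. BenchSegNet achieves the best performance of all methods in both the completeness and the accuracy of the extracted benches.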

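Table 1 Comparison of open-pit coal mine bench extraction results from different methods

| Bench type | Image | Label | RF | SVM | U-Net | ASPP-UNet | SegFormer | BenchSegNet |
|---|---|---|---|---|---|---|---|---|
| Straight bench | | | | | | | | |
| Curved bench | | | | | | | | |
| Polyline bench | | | | | | | | |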

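The performance of the algorithms is further assessed with the accuracy metrics in Table 2. Overall, the accuracy of every method exceeds 93%, because negative samples make up a large share of all samples and are recognized at a high rate. BenchSegNet achieves the best precision, recall, and F1 score, at 80.93%, 73.47%, and 77.02%, respectively. Compared with SegFormer, BenchSegNet improves precision, recall, and F1 score by 6.19, 4.09, and 5.06 percentage points, respectively. Compared with the conventional CNNs, its F1 score is about 10 percentage points higher (10.63 over U-Net and 9.25 over ASPP-UNet); compared with the machine learning algorithms, its F1 score is about 13 to 15 percentage points higher (13.08 over RF and 14.96 over SVM). The architecture of BenchSegNet therefore combines the strengths of CNNs and SegFormer and effectively improves the accuracy and robustness of open-pit coal mine bench extraction.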

Table 2 Comparison of accuracy metrics for different methods

| Method | Precision/% | Recall/% | F1 score/% | Accuracy/% |
|---|---|---|---|---|
| RF | 64.56 | 63.33 | 63.94 | 93.71 |
| SVM | 65.32 | 59.11 | 62.06 | 94.60 |
| U-Net | 66.77 | 66.01 | 66.39 | 96.02 |
| ASPP-UNet | 66.16 | 69.46 | 67.77 | 96.12 |
| SegFormer | 74.74 | 69.38 | 71.96 | 97.20 |
| BenchSegNet | 80.93 | 73.47 | 77.02 | 97.69 |

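3.2 Ablation experiments

Compared with SegFormer, BenchSegNet makes two main changes: first, it adds a model input connected only to the SegFormer branch to extract spectral information from the original image $I_{image}$; second, it is combined with U-Net to strengthen the extraction of local detail features. The ablation experiments in this section again use $L_{BCE}$ as the loss function and precision, recall, and F1 score as evaluation metrics; the results are shown in Table 3.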

Table 3 Ablation experiment results

| Method | Precision/% | Recall/% | F1 score/% |
|---|---|---|---|
| SegFormer | 74.74 | 69.38 | 71.96 |
| SegFormer+$I_{image}$ | 78.04 | 68.11 | 72.74 |
| SegFormer+U-Net | 76.27 | 72.35 | 74.26 |
| SegFormer+$I_{image}$+U-Net (BenchSegNet) | 80.93 | 73.47 | 77.02 |

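1) Effectiveness of the $I_{image}$ input. In this experiment, $I_{image}$ was added as an extra model input and connected to the SegFormer branch rather than to the U-Net branch. This is because SegFormer has a stronger capacity for generalized feature extraction, whereas U-Net adapts poorly to a sharp increase in input features and may overfit, which would reduce the practicality of the model. As Table 3 shows, adding $I_{image}$ as an input raised precision by 3.30 percentage points, because the added spectral features increase the dimensionality of the feature space and thus the ability to discriminate benches. At the same time, recall fell by 1.27 percentage points, possibly because the new features make the model's decisions more conservative. Overall, the F1 score rose by 0.78 percentage points, which demonstrates the effectiveness of adding $I_{image}$ as an input.

2) Effectiveness of the U-Net component. After the U-Net architecture was fused into SegFormer, precision, recall, and F1 score rose by 1.53, 2.97, and 2.30 percentage points, respectively. Introducing the U-Net architecture thus improved the model on every metric and effectively compensated for SegFormer's weakness in extracting bench detail features, demonstrating its effectiveness.

3) Effectiveness of the two modules together. With both the $I_{image}$ input and the U-Net component added, precision, recall, and F1 score rose by 6.19, 4.09, and 5.06 percentage points, respectively. Together with the results above, this shows that the $I_{image}$ input and the U-Net component not only make effective use of image features but also improve the extraction of detail features, which in turn improves bench recognition in open-pit mining areas. This reflects the effectiveness of the two modules added to SegFormer in this study.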
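The percentage-point gains quoted in the ablation discussion can be reproduced from the values in Table 3 with a few lines of Python. The dictionary keys and the helper names `gains` and `f1_from` are illustrative; the numbers are copied from the table.

```python
# (precision, recall, F1) in percent, copied from Table 3.
results = {
    "SegFormer": (74.74, 69.38, 71.96),
    "SegFormer+Iimage": (78.04, 68.11, 72.74),
    "SegFormer+U-Net": (76.27, 72.35, 74.26),
    "BenchSegNet": (80.93, 73.47, 77.02),
}


def gains(name, baseline="SegFormer"):
    """Percentage-point change of each metric relative to the baseline."""
    return tuple(round(a - b, 2)
                 for a, b in zip(results[name], results[baseline]))


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for name in results:
    if name != "SegFormer":
        print(name, gains(name))
```

Recomputing F1 from the tabulated precision and recall with `f1_from` agrees with the reported F1 scores to within rounding, which is a quick consistency check on the table.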

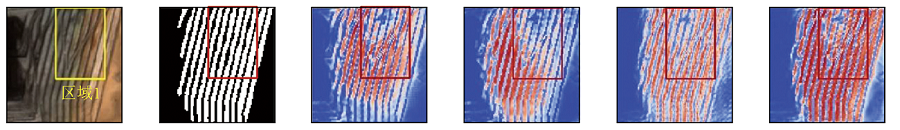

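3.3 Model application results

To test the practicality of the model, the Manglai open-pit coal mine in the Northern Sand Prevention Belt was selected as the study area and observed over four consecutive periods during 2022-2023. The bench extraction results are shown in Table 4. Most of the mine's benches were extracted correctly, with an extraction rate above 90%, which broadly reflects the state of bench mining; the extracted benches are also fairly complete and continuous, without large breaks. Table 4 shows that from March 2022 to March 2023 the mine kept expanding due east and added roughly the same number of benches in each period, indicating relatively stable production capacity. From March 2023 to November 2023, however, the number of benches increased rapidly and the mine also began expanding to the southeast, indicating that its production scale grew substantially in a short time. The BenchSegNet model developed in this paper can therefore be used for time-series monitoring of open-pit coal mine benches, reflecting the expansion of a mine as well as the intensity and location of mining activity.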

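Table 4 Bench extraction results for the Manglai open-pit coal mine, 2022-2023

| Type | March 2022 | November 2022 | March 2023 | November 2023 |
|---|---|---|---|---|
| Manglai coal mine image | | | | |
| Bench extraction result | | | | |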

4 Discussion

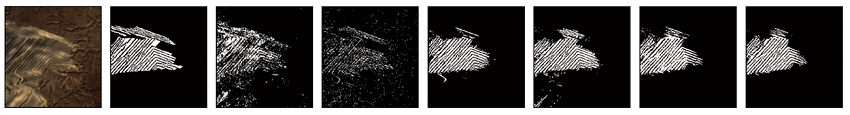

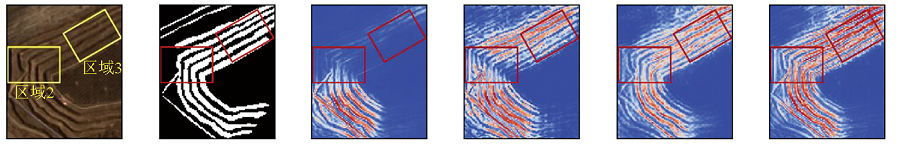

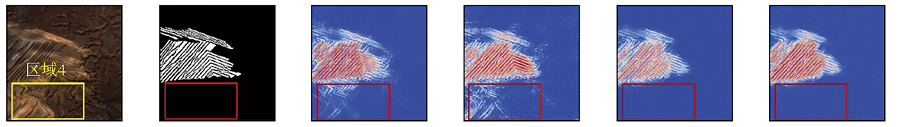

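To further analyze the performance of the proposed BenchSegNet model in open-pit coal mine bench extraction, confidence heat maps were drawn for each model from its decoder layer (Table 5).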

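Table 5 Confidence heat maps produced by the decoder of each model

| Bench type | Image | Label | U-Net | ASPP-UNet | SegFormer | BenchSegNet |
|---|---|---|---|---|---|---|
| Straight bench | | | | | | |
| Curved bench | | | | | | |
| Polyline bench | | | | | | |
| Legend | | | | | | |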

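Overall, U-Net has high confidence on finely textured parts of the image but low confidence in some large-scale areas; ASPP-UNet raises confidence in large-scale areas through the added ASPP module; SegFormer further raises confidence in hard-to-classify areas, but its confidence drops on some finely textured parts. BenchSegNet effectively raises confidence over bench areas, combining the strengths of the shallow U-Net architecture and the SegFormer module to locate benches in the mining area.

In region 1, the extraction results of all models show breaks caused by access ramps, whereas BenchSegNet largely excludes the influence of the access ramps and raises the confidence on the benches. In region 2, the conventional CNNs cannot accurately distinguish a turn in a bench from a break; SegFormer improves considerably on this, and BenchSegNet further raises the confidence at turns. In region 3, all models except U-Net, aided by attention mechanisms, perform well on blurred, hard-to-classify areas, and BenchSegNet raises the confidence further, demonstrating good robustness. Finally, in region 4, SegFormer excludes interference around the benches more effectively than the conventional CNNs, while BenchSegNet not only excludes the interference but also raises the recognition confidence, further demonstrating its advantages in extracting semantic information and combining context.

The experimental results show that BenchSegNet has a strong capacity for extracting open-pit coal mine benches. It uses Sentinel-2 data, which offer global coverage, a revisit period of 5 d, and a spatial resolution of 10 m, so BenchSegNet combined with Sentinel-2 imagery can be used for near-real-time monitoring of open-pit coal mine benches worldwide. The extracted bench information can also reflect the dynamics of production activity inside open-pit coal mines, providing solid data for mine supervision, assessment of the energy transition, and evaluation of environmental impact.

The model nevertheless has some shortcomings: overall accuracy is not high, holes appear inside benches, and a small number of pixels outside the benches are still falsely detected. In addition, the model has two inputs, the original image and the feature enhancement result, and needs further optimization to reduce the number of processing steps. The model could be improved in the following ways: ① using optical imagery with higher spatial resolution, which would address the shortage of useful features in the original images; ② combining object-based methods with deep learning, which could fill holes inside benches and reduce false detections in the background; ③ fusing multi-source data such as hyperspectral imagery, synthetic aperture radar, and lidar, so that multi-dimensional features are used together for bench extraction.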

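5 Conclusions

This paper proposes a method for extracting open-pit coal mine benches based on Sentinel-2 imagery and BenchSegNet, which can provide technical support for studying production inside open-pit coal mines and for environmental impact assessment. For open-pit coal mine benches, the paper first designed bench labeling rules and produced a sample set, and then designed BenchSegNet, a model built on SegFormer specifically for open-pit coal mine bench extraction. In comparisons with other methods, BenchSegNet achieved the highest accuracy. This is mainly because BenchSegNet inherits SegFormer's strong generalization, which lets it handle the complex scenes of open-pit coal mines, and because the shallow U-Net framework refines the output and recovers more of the detail that would otherwise be lost. The ablation experiments confirm the effectiveness of the changes made to SegFormer. The BenchSegNet model and Sentinel-2 imagery have strong potential for near-real-time monitoring of production activity in open-pit coal mines worldwide; future work could further improve the approach by fusing multi-source remote sensing data and building a global database of labeled open-pit coal mine bench images.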

References

[1] Individual coal mine methane emissions constrained by eddy covariance measurements: Low bias and missing sources[J].

[2] 基于改进DenseNet网络的多源遥感影像露天开采区智能提取方法 (Opencast mining area intelligent extraction method for multi-source remote sensing image based on improved DenseNet)[J]. DOI:10.11873/j.issn.1004-0323.2020.3.0673

[3] 基于DeepLabv3+与GF-2高分辨率影像的露天煤矿区土地利用分类 (Recognition of land use on open-pit coal mining area based on DeepLabv3+ and GF-2 high-resolution images)[J].

[4] A lightweight convolutional neural network based on dense connection for open-pit coal mine service identification using the edge-cloud architecture[J].

[5] Open-pit mining area extraction from high-resolution remote sensing images based on EMANet and FC-CRF[J].

[6] Large-scale foundation model enhanced few-shot learning for open-pit minefield extraction[J].

[7] Open-pit mine area mapping with Gaofen-2 satellite images using U-net+[J].

[8] Attention is all you need[C]//Advances in Neural Information Processing Systems 30 (NIPS 2017).

[9] An image is worth 16x16 words: Transformers for image recognition at scale[J/OL].

[10] SegFormer: Simple and efficient design for semantic segmentation with Transformers[J/OL].

[11] 基于SegFormer的超声影像图像分割 (Ultrasonic image segmentation based on SegFormer)[J].

[12] Semantic segmentation of China’s coastal wetlands based on Sentinel-2 and SegFormer[J]. DOI:10.3390/rs15153714

[13] Method of building detection in optical remote sensing images based on SegFormer[J]. DOI:10.3390/s23031258

[14] 我国露天煤矿开采工艺及装备研究现状与发展趋势 (Research status and development trend of mining technology and equipment in open-pit coal mine in China)[J].

[15] 北方防沙带典型县域生态安全格局研究 (Ecological security pattern of typical counties in northern sand prevention belts)[J].

[16] Landslide mechanism and stability of an open-pit slope: The Manglai open-pit coal mine[J].

[17] Sentinel-2 data for land cover/use mapping: A review[J]. DOI:10.3390/rs12142291

[18] ImageNet classification with deep convolutional neural networks[J]. DOI:10.1145/3065386

[19] U-Net: Convolutional networks for biomedical image segmentation[C]//

[20] DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs[J]. DOI:10.1109/TPAMI.2017.2699184