|

|

|

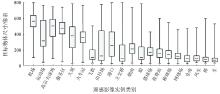

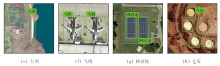

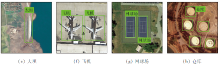

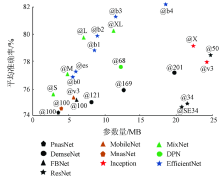

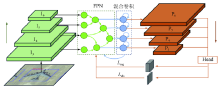

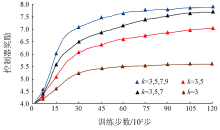

Abstract

With the rise of the "smart city" concept, remote sensing object detection has become an important tool for urban planning, construction and maintenance. To capture the distinctive remote sensing features of different cities and to address the model's uneven generalization across objects of different scales, this paper proposes a pyramid structure search method based on hybrid separable convolution. First, the spatial distribution characteristics of the remote sensing image dataset are analyzed, a multi-receptive-field hybrid convolution search space is constructed from these characteristics, and the weights of its sub-networks are trained. Second, a reinforcement learning algorithm, driven by the sequence of converged loss values, iteratively searches for the number and structure of the feature extraction units. Finally, once the architecture reward function has stabilized, the corresponding architecture parameters and weight matrices are fixed, so that cross-scale image information can be fused adaptively on the test data, improving the localization accuracy for similar objects at different resolutions. On the DIOR remote sensing dataset, the network found by this method reaches a mean average precision of 78.6%, which is 6 percentage points higher than CornerNet and 1.6 percentage points higher than Cascade R-CNN; on small objects, its accuracy is 2.1 percentage points higher than that of Cascade R-CNN. These results confirm that multi-scale architecture search can improve remote sensing object detection.
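The abstract describes the search loop only at a high level. The Python sketch below illustrates one way such a loop can work. Every concrete choice in it is an assumption made for illustration, not the authors' implementation: the toy search space (2 to 4 units, each mixing kernel sizes from 3 up to 9), the equal channel split across kernel sizes in the style of mixed depthwise (MixConv-type) convolution, a REINFORCE controller with a moving-average baseline (the abstract says only "reinforcement learning"), and the hypothetical `proxy_reward`, which stands in for evaluating a weight-sharing sub-network on validation data. Treating the reward as stable once it stays within `tol` of its moving average for `patience` consecutive steps is likewise only one possible reading of the stopping rule.

```python
import math
import random

# Hypothetical search space (an assumption, not the paper's exact space):
# how many feature-extraction units to stack, and which kernel sizes each
# unit mixes, i.e. its set of receptive fields.
NUM_UNITS_CHOICES = [2, 3, 4]
KERNEL_SET_CHOICES = [(3,), (3, 5), (3, 5, 7), (3, 5, 7, 9)]


def split_channels(channels, num_groups):
    """Split channels equally across kernel sizes; the remainder goes to group 0."""
    sizes = [channels // num_groups] * num_groups
    sizes[0] += channels - sum(sizes)
    return sizes


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def proxy_reward(kernel_sets):
    """Hypothetical stand-in for the validation accuracy of a sub-network.

    It favours large receptive fields, charges for each extra kernel branch
    and adds a small bonus per unit; a real search would evaluate the detector.
    """
    unit_scores = [max(ks) / 9 - 0.08 * len(ks) for ks in kernel_sets]
    return sum(unit_scores) / len(unit_scores) + 0.03 * len(kernel_sets)


class Controller:
    """One independent categorical policy per decision, trained with REINFORCE."""

    def __init__(self, lr=1.0):
        self.lr = lr
        self.unit_logits = [0.0] * len(NUM_UNITS_CHOICES)
        self.kernel_logits = [[0.0] * len(KERNEL_SET_CHOICES)
                              for _ in range(max(NUM_UNITS_CHOICES))]

    def sample(self, rng):
        """Sample the number of units, then one kernel set per active unit."""
        n_idx = rng.choices(range(len(NUM_UNITS_CHOICES)),
                            weights=softmax(self.unit_logits))[0]
        num_units = NUM_UNITS_CHOICES[n_idx]
        k_idx = [rng.choices(range(len(KERNEL_SET_CHOICES)), weights=softmax(logits))[0]
                 for logits in self.kernel_logits[:num_units]]
        return n_idx, k_idx

    def update(self, n_idx, k_idx, advantage):
        """REINFORCE step: d log softmax(z)[a] / dz = onehot(a) - softmax(z)."""
        decisions = [(self.unit_logits, n_idx)] + list(zip(self.kernel_logits, k_idx))
        for logits, chosen in decisions:
            probs = softmax(logits)
            for i in range(len(logits)):
                logits[i] += self.lr * advantage * (float(i == chosen) - probs[i])

    def freeze(self):
        """Fix the architecture: the most probable option for every decision."""
        num_units = NUM_UNITS_CHOICES[argmax(self.unit_logits)]
        return [KERNEL_SET_CHOICES[argmax(logits)]
                for logits in self.kernel_logits[:num_units]]


def search(max_steps=5000, tol=1e-3, patience=50, seed=0):
    """Run the controller until the reward stays stable for `patience` steps."""
    rng = random.Random(seed)
    ctrl = Controller()
    baseline, stable_steps = None, 0
    for step in range(1, max_steps + 1):
        n_idx, k_idx = ctrl.sample(rng)
        reward = proxy_reward([KERNEL_SET_CHOICES[k] for k in k_idx])
        if baseline is None:
            baseline = reward
        advantage = reward - baseline
        ctrl.update(n_idx, k_idx, advantage)
        # "Stable" here: the reward stays within tol of its moving average.
        stable_steps = stable_steps + 1 if abs(advantage) < tol else 0
        baseline = 0.9 * baseline + 0.1 * reward  # moving-average baseline
        if stable_steps >= patience:
            break
    return ctrl.freeze(), step
```

Calling `search(seed=0)` returns the frozen list of kernel sets (one per unit) and the number of controller steps taken; which architecture it returns depends on the proxy reward and the seed. `split_channels(64, 3)` gives `[22, 21, 21]`, i.e. how 64 channels would be divided among the 3×3, 5×5 and 7×7 branches of one unit. In the method described in the abstract, each sampled architecture would instead be scored by the trained sub-network on held-out remote sensing data.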

Keywords

deep learning

remote sensing detection

architecture search

image pyramid

recall


Corresponding Authors:

LIAO Tiejun

E-mail: 1371566711@qq.com; ltjhy-007@163.com


Issue Date: 23 December 2020



2020, Vol. 32, Issue (4): 53-60

DOI: 10.6046/gtzyyg.2020.04.08
